Table of Contents

1. Introduction

2. History and Evolution of Ethereum Sharding

2-1. Early Ethereum sharding efforts: Hypercubes, Hub and Spoke Chains, Super Quadratic Sharding, Quadratic Sharding

2-2. The pursuit of simplicity and pragmatism: Full execution sharding → Data sharding

3. Danksharding

3-1. Danksharding, a new blockchain architecture for data sharding

3-2. Minimizing centralization: PBS (Proposer Builder Separation) and crList

3-3. Ensuring scalability and trust: DAS, Erasure Coding, and KZG Commitments

4. EIP-4844: Proto-Danksharding

4-1. EIP-4844, the cornerstone of Danksharding

4-2. Simply reducing calldata cost can cause block size issues

4-3. Structure and creation of blob transactions

5. Impact of EIP-4844 on Rollup Costs

5-1. DA (L1 publication) costs currently account for over 90% of total rollup costs

5-2. With the implementation of EIP-4844, the DA cost of rollups is expected to be almost free, and a significant increase in the blob fee would require the demand for rollups to grow by more than 10 times

5-3. Changing the cost structure of blobs is being actively discussed within the Ethereum community

6. Closing Thoughts

Annotation) Types of Ethereum data storage

A1. The main storage spaces in EVM are categorized as Storage, Memory, Stack, and Calldata

A2. The space used by rollups is called Calldata

1. Introduction

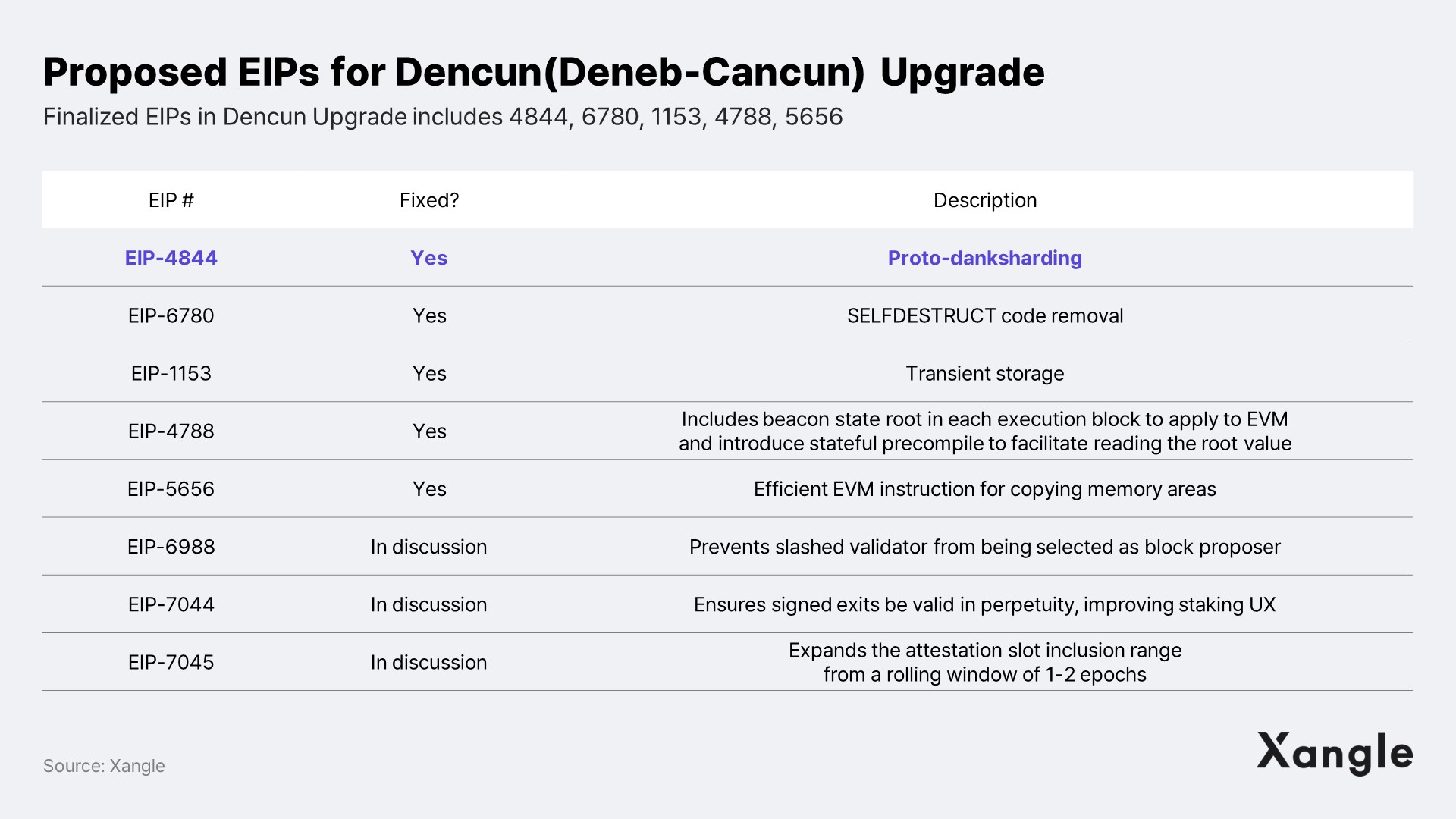

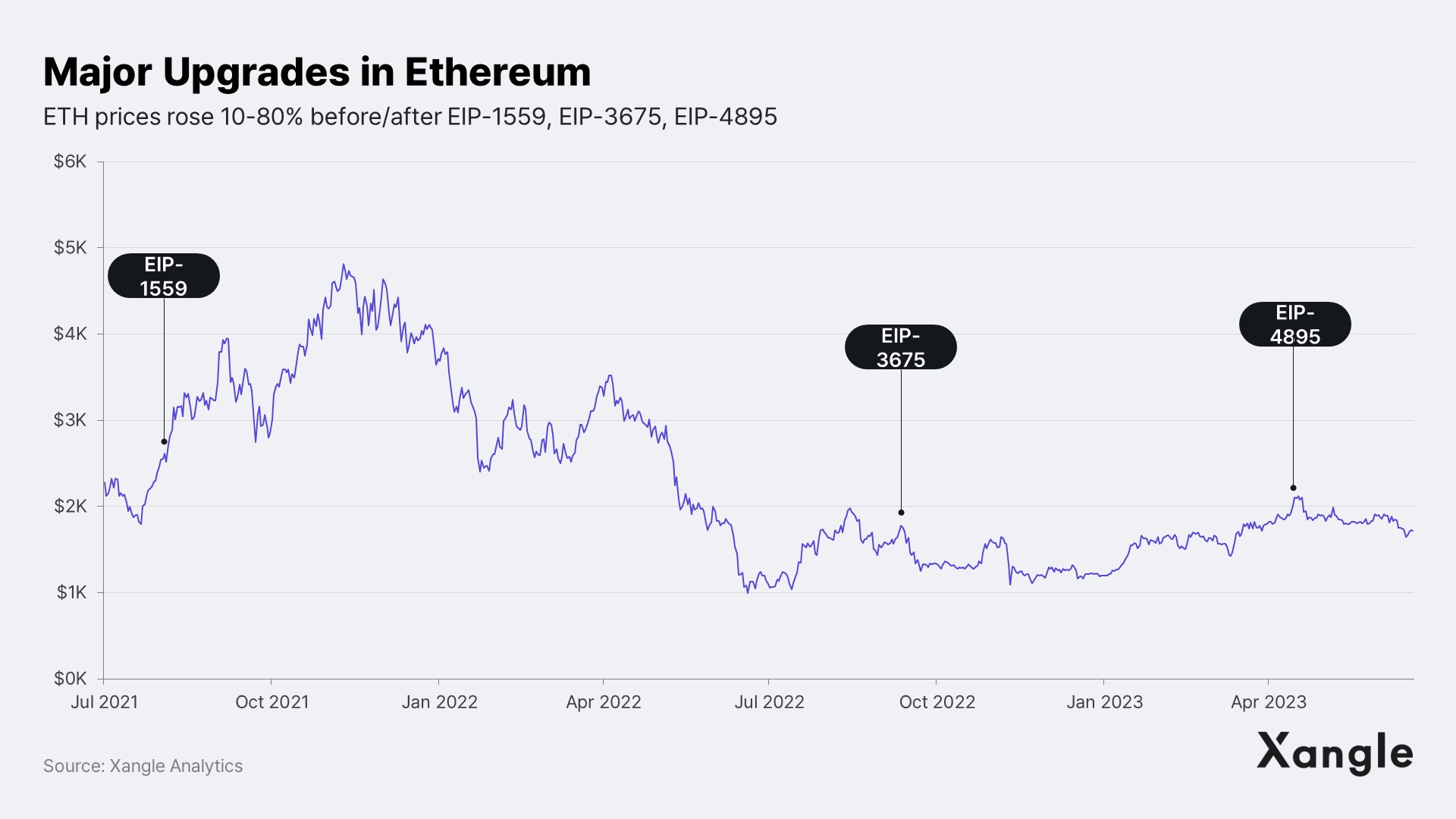

Alpha is hidden in EIPs. In the month before and after the EIP-1559 (London), EIP-3675 (The Merge), and EIP-4895 (Shanghai) upgrades, ETH prices rose 10% to 80%. The next upgrade is Dencun, the combined Deneb-Cancun hard fork scheduled for the end of this year. The most closely watched change in Dencun is EIP-4844, a.k.a. Proto-Danksharding, the very first step toward implementing Ethereum’s sharding roadmap and a proposal that will dramatically reduce the operational cost of rollups.

This report has two parts. The first retraces the history of Ethereum sharding, exploring how the sharding design has changed over time and how those changes led to Ethereum’s long-term vision of Danksharding. The second looks at the structure and implications of Proto-Danksharding and envisions how the economic structure of rollups will change after EIP-4844.

2. History and Evolution of Ethereum Sharding

2-1. Early Ethereum sharding efforts: Hypercubes, Hub and Spoke Chains, Super Quadratic Sharding, Quadratic Sharding

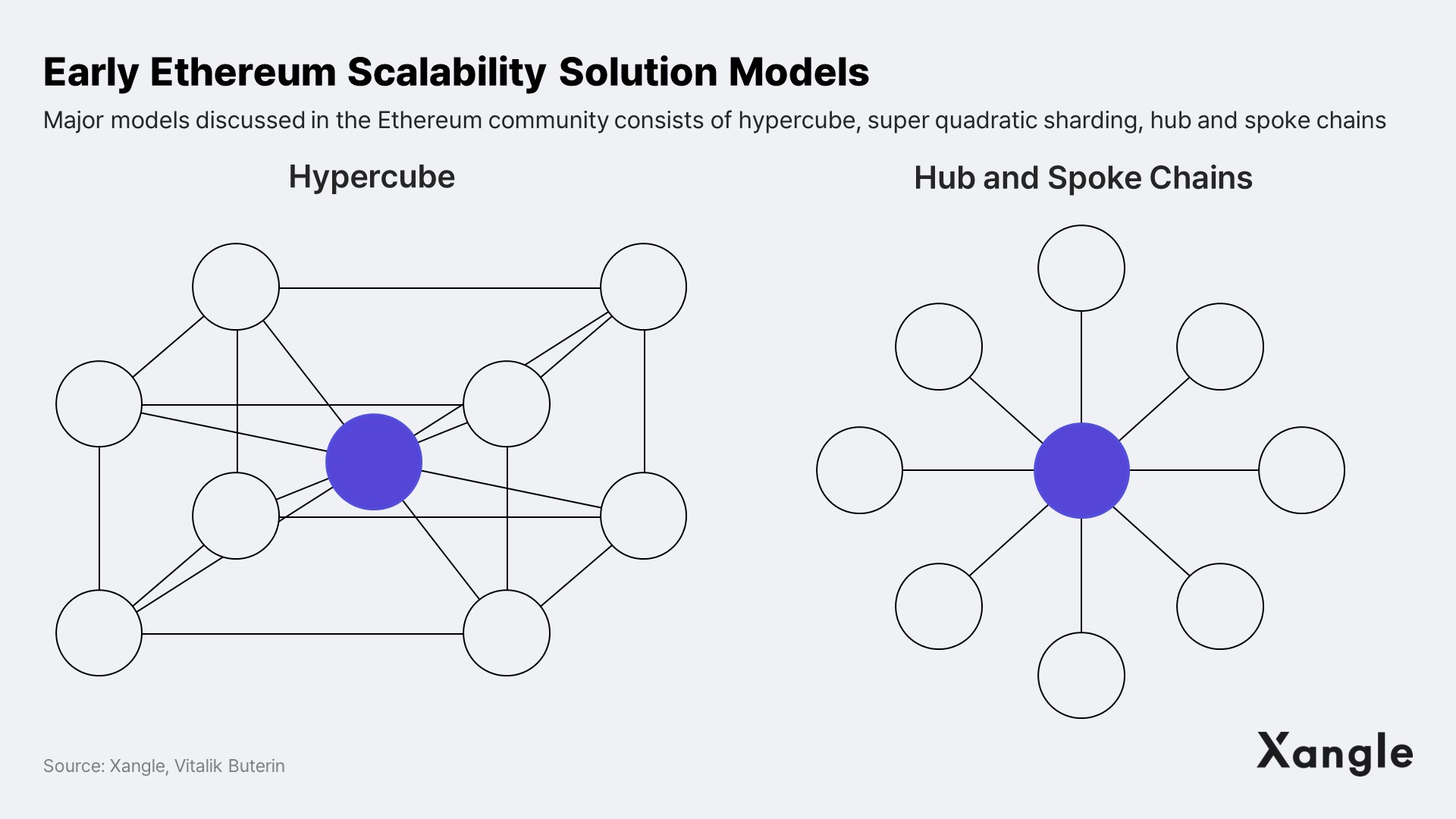

In retrospect, the scalability solutions proposed by the nascent Ethereum community, such as Hypercubes, Super Quadratic Sharding, and Hub and Spoke Chains, were notably audacious and ahead of their time. In particular, hypercubes and hub and spoke chains were proposed by Vitalik in late 2014, before the Ethereum mainnet was even launched (see “Scalability, Part 2: Hypercubes”). While hub and spoke chains were an early form of today’s Polkadot Relay Chain-Parachain structure, hypercubes emerged as an answer to the flaws of hub and spoke chains. The hypercube's advantage is improved transaction speed via cross-substate messaging. Unfortunately, the model was not adopted due to its many attack vectors and complex implementation, but these ideas were later vital in shaping the quadratic sharding* model that appeared on the ETH2 roadmap. Sharding on Ethereum has since undergone three major shifts that produced the sharding model we know today.

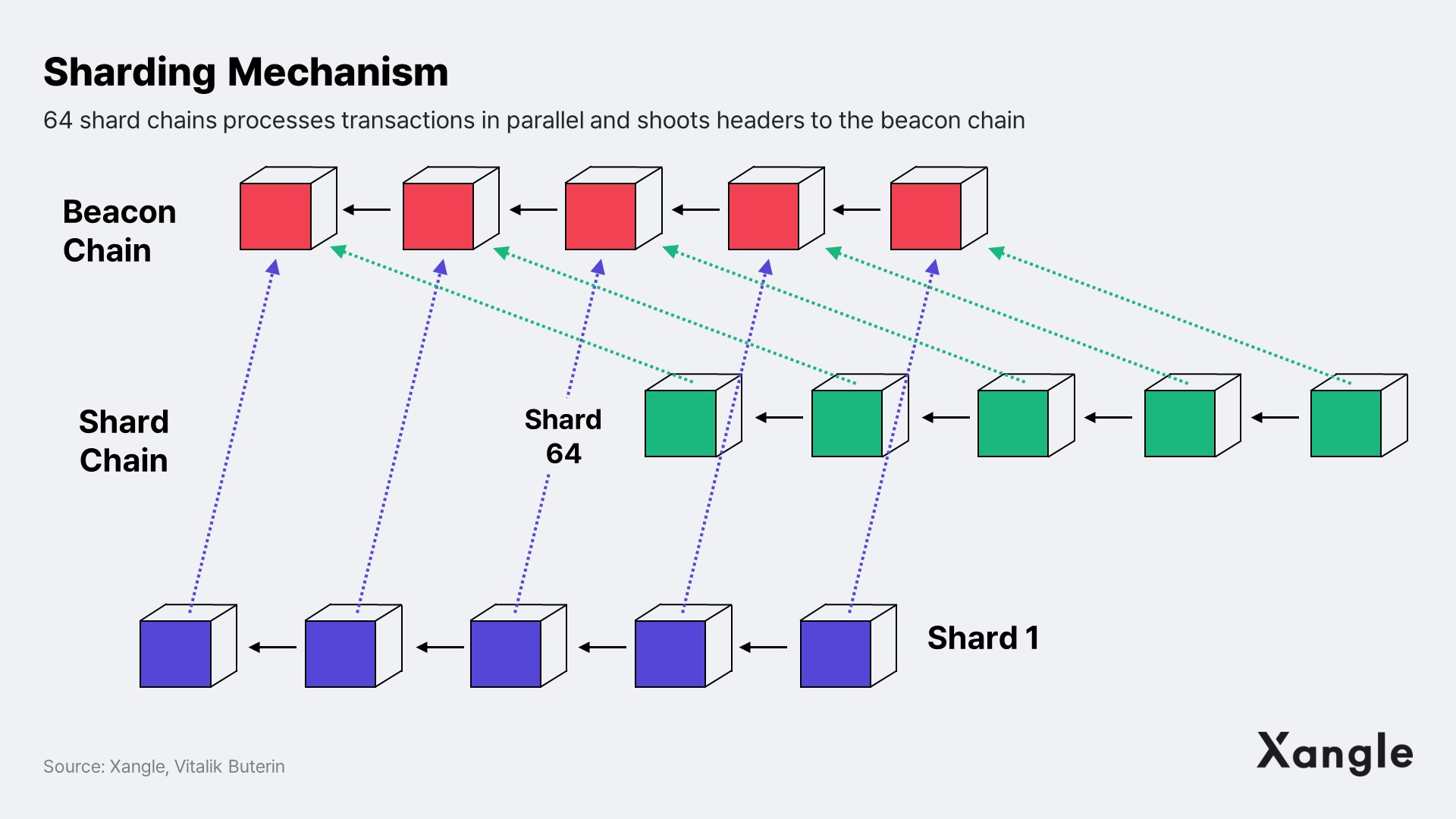

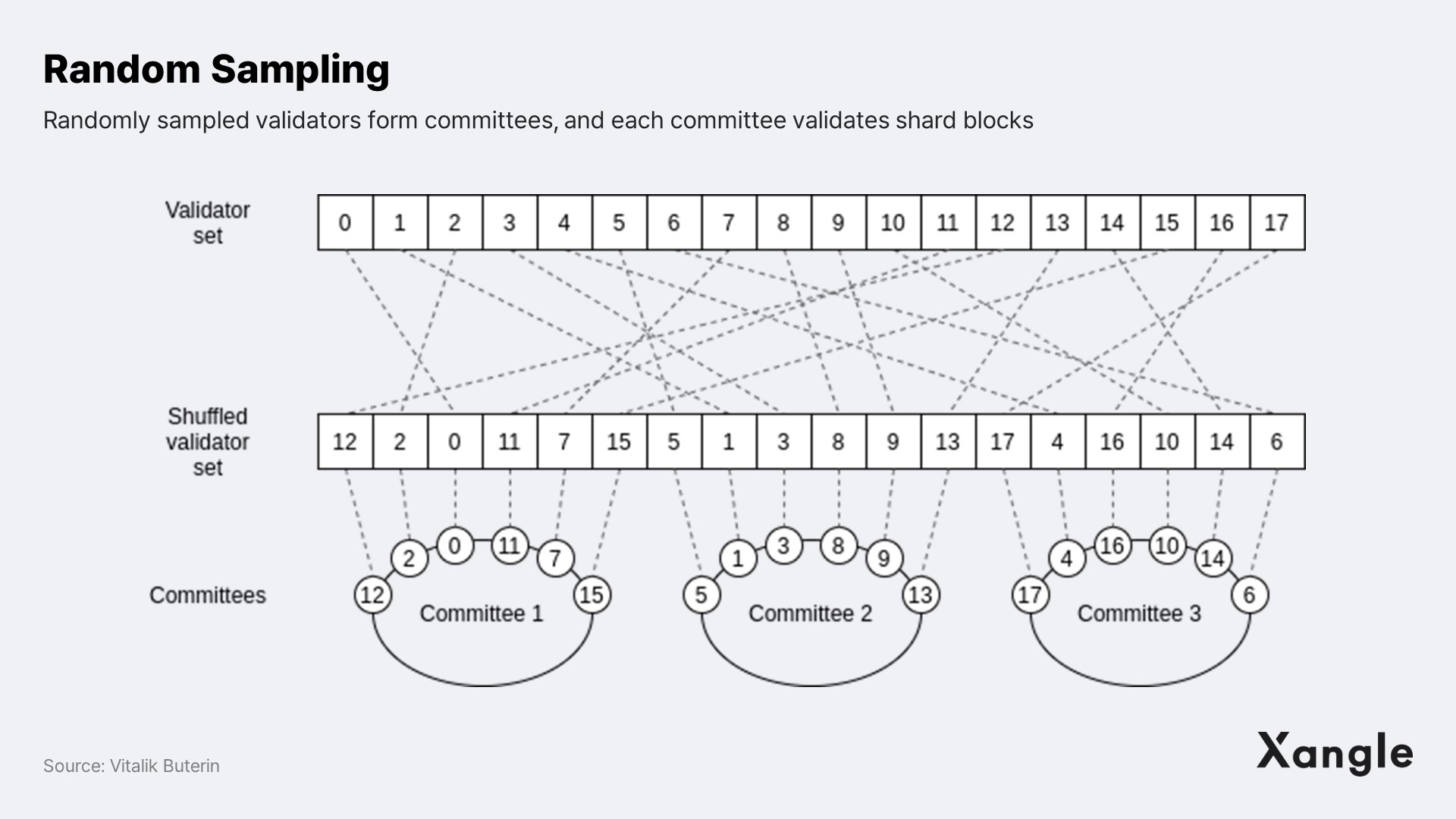

*Quadratic sharding: The blockchain is separated into a beacon chain and a chain of 64 shards, and each shard processes transactions in parallel and shoots headers to the beacon block. Each beacon block contains transactions for all 64 shards, and each shard block is validated by 64 committees composed of Ethereum's validators. The committees are randomly assembled through a process called random sampling (see “Sharding: The Future of the Ethereum Blockchain”).

A significant shift transpired between late 2017 and early 2018 as the roadmap for Serenity, or ETH2, started to solidify. Decisively, complex theories of super quadratic and exponential sharding were set aside in favor of first implementing quadratic sharding. Super quadratic sharding adds shards upon shards, much like fractal scaling. This redirection enabled Ethereum to concentrate its R&D efforts solely on quadratic sharding. A clear indication of this strategic shift can be found in Justin Drake's "Sharding Phase 1 Spec" from March 2018, where super quadratic sharding is slated for Phase 6, the ultimate stage.

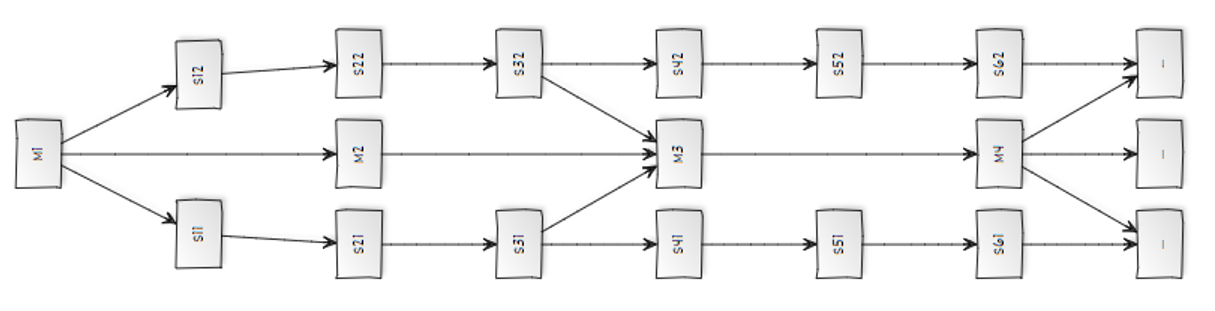

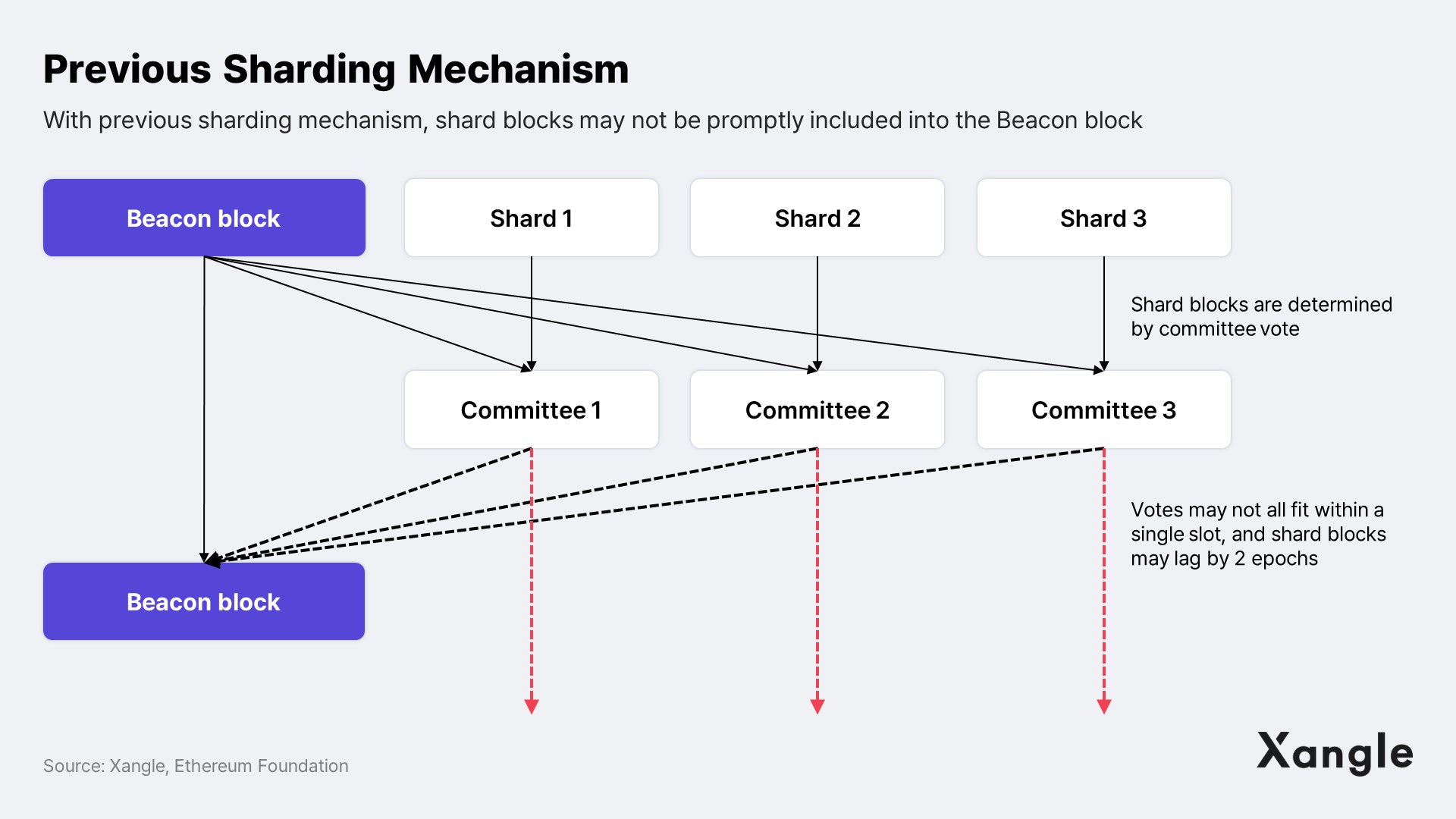

The second pivotal shift took place in the latter half of 2019, when the development of crosslinks* was set aside in favor of a one-way delivery of shard blocks to the Beacon Chain (as documented on GitHub). Initially, crosslinks were designed to interconnect the Beacon and Shard chains and facilitate communication between them, as depicted in the figure below (where 'M' represents the main chain or Beacon chain, and 'S' stands for the Shard chain). With this approach discarded, the design only needed to handle the transaction flow from the shard chains to the Beacon chain. Simply put, the previous model required crosslinks for interchain connectivity, a requirement eliminated in the redefined structure.

*Crosslink: A signature from the beacon chain validators or committee that validated a shard chain block, used by the beacon chain to verify the latest state of the shard chain or to interact with the shard chain.

Chain cross-linking, Source: Vitalik Buterin

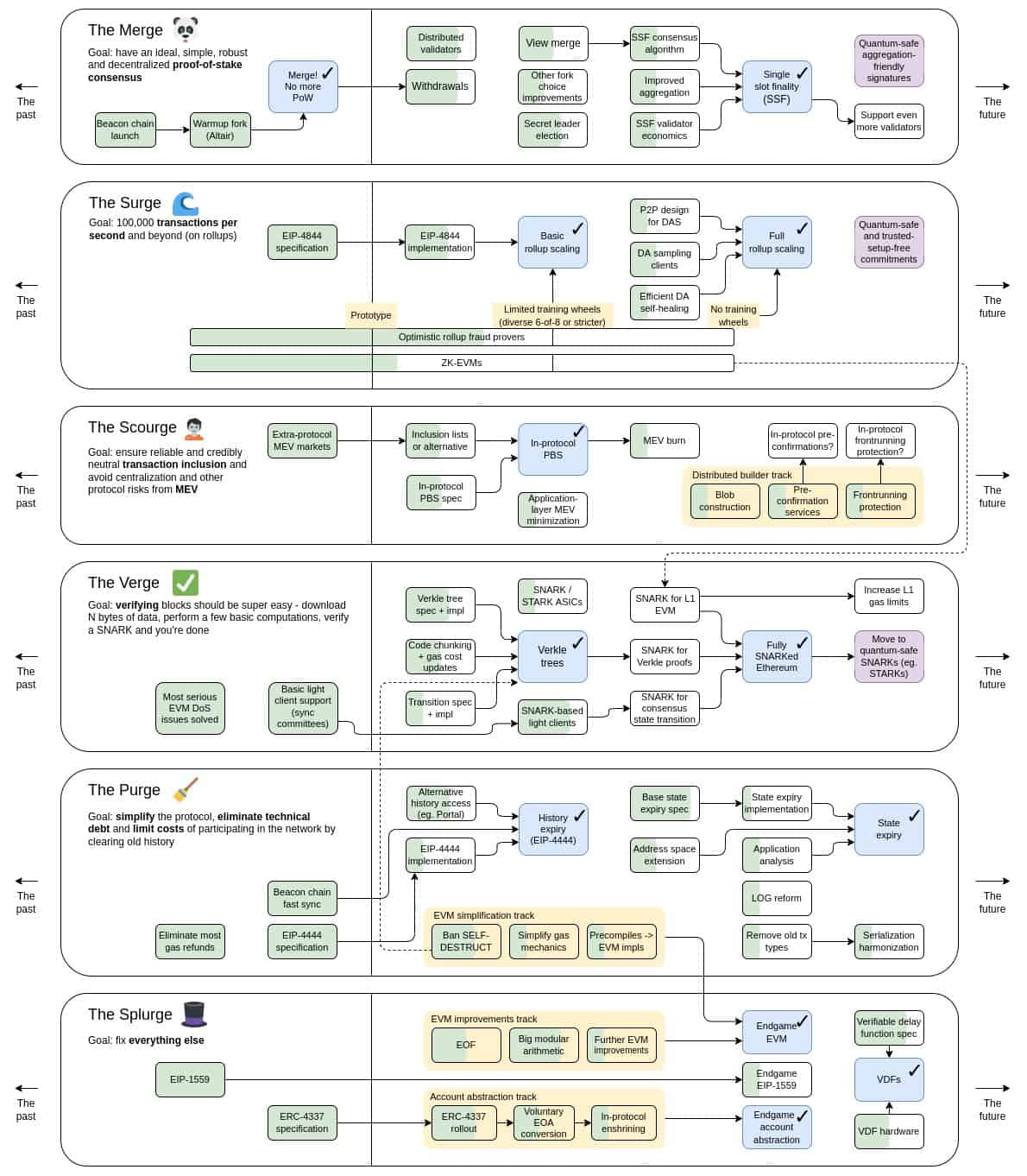

The last major shift arrived in 2020 with Vitalik's unveiling of the "Rollup-centric ethereum roadmap." As the phrase "rollup-centric roadmap" implies, the role of rollups in the Ethereum ecosystem has expanded significantly ever since. The idea was to assign rollups the task of transaction execution while using shards merely for data availability. This marked Ethereum's strategic move from execution sharding to data sharding.

Ethereum Roadmap, Source: Vitalik Buterin

2-2. The pursuit of simplicity and pragmatism: Full execution sharding → Data sharding

The trajectory of Ethereum's sharding can be characterized as a persistent pursuit of reduction and simplification. From Ethereum's inception, sharding was consistently proposed as a scalability solution. However, given its technical complexity and implementation challenges, the concept of sharding gradually pivoted toward practicality and simplicity. Naturally, it evolved from full execution sharding, where each shard processed all transactions, to data sharding, where shards merely store transaction data executed by the rollups.

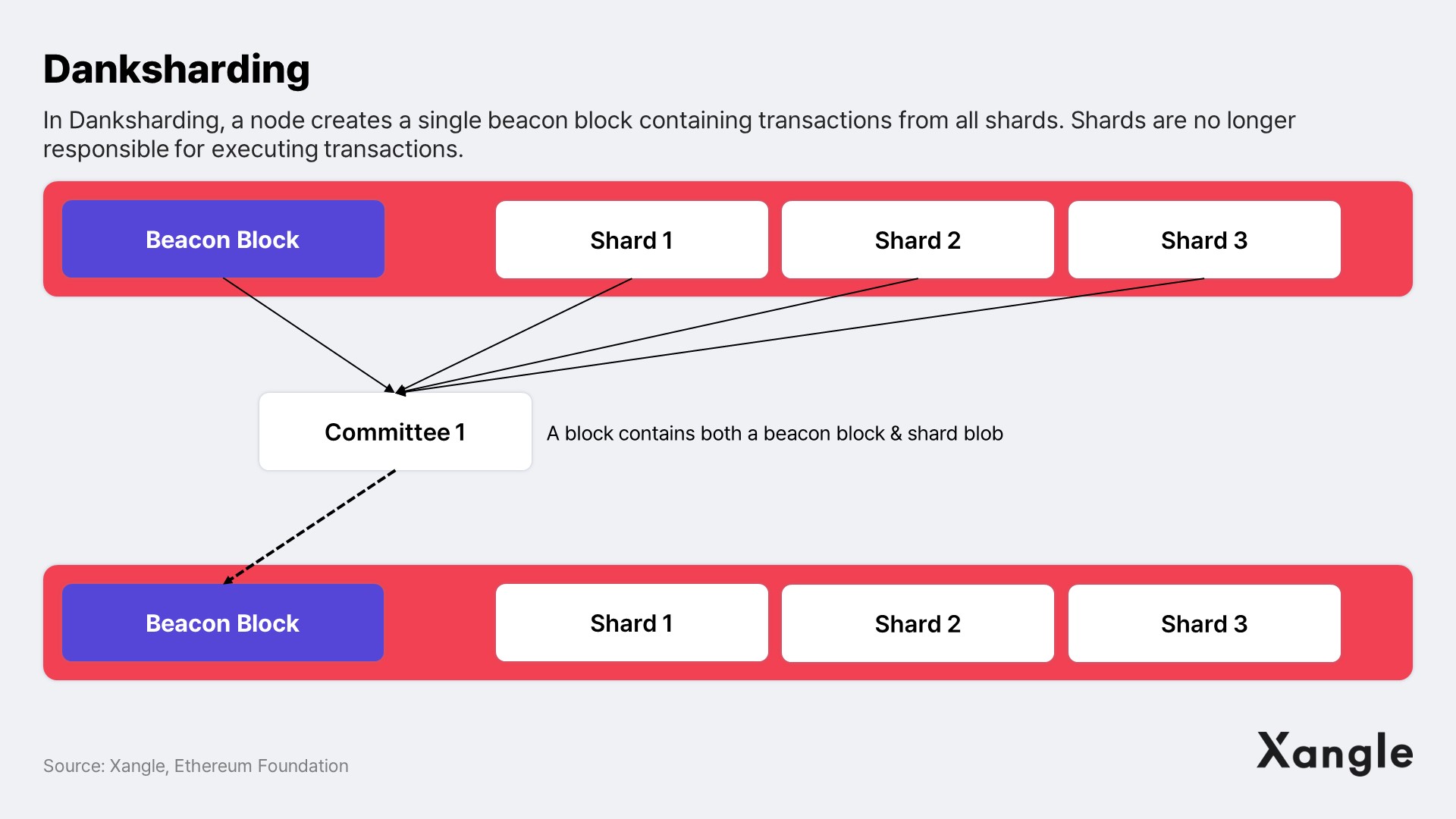

The revised roadmap seeks to use the shard chain solely as a data availability layer for rollups, while tasking rollups with transaction execution. The consensus layer's role here is to guarantee the data availability of shards. To effectuate this, Dankrad Feist, a researcher at the Ethereum Foundation, proposed in his December 2021 article "New sharding design with tight beacon and shard block integration," a strategy for a single block builder to generate a beacon block encapsulating all transactions from each shard. At the same time, he also introduced Proposer Builder Separation (PBS) to decouple the roles of proposer and builder, thereby mitigating centralization in the block creation process. This proposal resonated with the Ethereum community and was integrated into the official Ethereum roadmap, leading to the birth of Danksharding.

3. Danksharding

3-1. Danksharding, a new blockchain architecture for data sharding

In ETH2 sharding, Ethereum validators are randomly sampled to form committees, which take turns simultaneously creating and validating 64 shard blocks. However, the absence of data availability sampling (DAS) necessitates validators to manually store the entire shard data and provide proof of its availability. This system relies on the assumption that all nodes are honest, as there is no way to verify if a particular validator deliberately withholds data. Moreover, the committee must manually tally each node's vote for shard blocks, which can cause time delays and hinder timely inclusion of shard blocks in the beacon block. As such, the earlier sharding methods were quite complex to implement and carried many risks.

In Danksharding, each node produces one large block containing all shard blobs*, which is then validated and voted on by a committee. This effectively solves the previously mentioned problem of shard blocks not being promptly included in beacon blocks. Centralization of the block production process arising from the structure of Danksharding can be minimized through the introduction of Proposer Builder Separation (PBS). PBS is a method to encode the principle of "centralized block production, decentralized block validation" that Vitalik laid out in Endgame.

*Blob: Short for Binary Large Objects, Blob is a new data type that is stored only in the Beacon Chain. The concept of blobs was first introduced alongside Danksharding and is expected to be used primarily for storing rollup data.

3-2. Minimizing centralization: PBS (Proposer Builder Separation) and crList

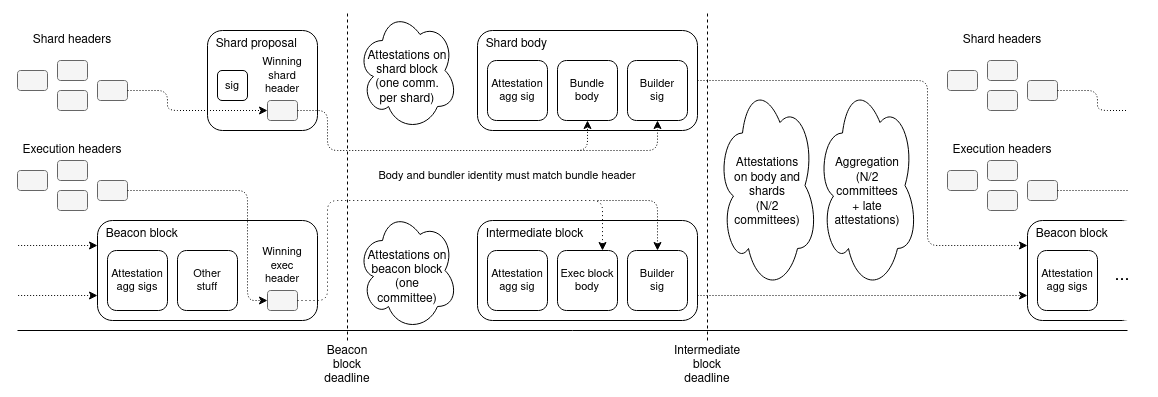

Initially outlined in "Proposer/Block Builder Separation-friendly Fee Market Designs," PBS was primarily developed to decentralize MEV. However, its design and principles meshed well with the Danksharding architecture, leading to its subsequent integration within that structure.

MEVs are predominantly leveraged by a small number of powerful nodes that have the HW to update the mempool swiftly and resources to develop advanced MEV algorithms. Basically, PBS revolves around the idea of splitting the role of a block producer into a proposer and a builder, offering a chance for nodes with lower computing power and fewer resources to obtain MEV. Builders are powerful validators capable of developing advanced MEV algorithms and responsible for ordering transactions and creating blocks. Proposers, on the other hand, have the power to decide which of the blocks proposed by builders will be recorded on the chain.

With PBS, even the most computationally powerful nodes will have to share MEV gains with proposers. This stands in contrast to the previous process, in which a single validator had a monopoly on transaction inclusion and block generation. Under PBS, the proposer receives the bid amount, and the builder receives the MEV, minus transaction fees and the bid amount.

It's important to note that during the bidding process, builders publish only the block header and the desired bid price first, instead of the entire block data. Releasing the full block data upfront could allow other builders to copy the transaction and take the MEV. This is why the full block data is only released after the proposer has selected the block.

Two-slot PBS architecture | Source: Two-slot proposer/builder separation

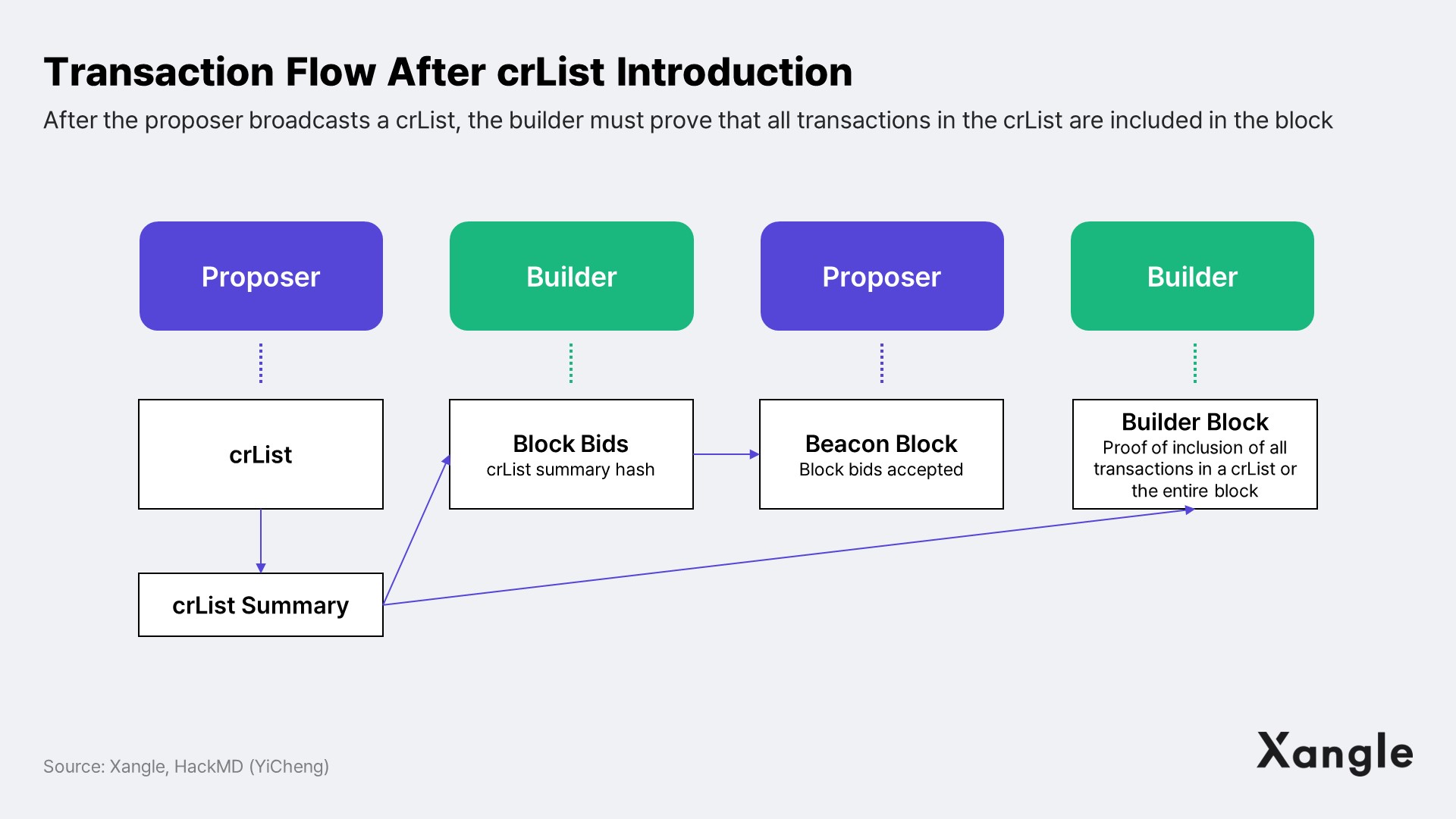

One problem with PBS is that it delegates block creation authority to builders, potentially compromising censorship-resistance. To address this, the Ethereum community is planning to introduce the Censorship Resistance List (crList). Once crList is implemented, the transaction flow is expected to be as follows:

- A proposer broadcasts a crList containing all valid transactions in their mempool to a builder.

- The builder constructs a block based on the transactions in the crList and submits it to the proposer. In doing so, the builder includes a transaction hash in the block body that proves that it contains all the transactions from the crList.

- The proposer selects the block with the highest bid, constructs the block header, and notifies the nodes.

- The builder submits a block and a proof that it has included all transactions from the crList.

- The block is added to the chain. If the builder does not submit a proof, the block will not be accepted by the fork choice rule.
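The flow above can be sketched in a few lines. This is only an illustrative model, not the actual crList design: the `BuilderBid` type, the `covers_crlist` check, and the rule that a non-covering bid is simply ignored are all assumptions made for the example (in a real protocol the proof and the fork choice rule would enforce this, and a full block might be exempt).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuilderBid:
    """Hypothetical bid: who built the block, what they pay, which txs it holds."""
    builder: str
    bid_wei: int
    tx_hashes: frozenset


def covers_crlist(bid: BuilderBid, crlist: list) -> bool:
    # Stand-in for the inclusion proof: every crList tx must be in the block.
    return set(crlist) <= bid.tx_hashes


def select_block(bids: list, crlist: list):
    # The proposer only considers bids that cover the crList,
    # then picks the highest bid among them.
    eligible = [b for b in bids if covers_crlist(b, crlist)]
    if not eligible:
        return None
    return max(eligible, key=lambda b: b.bid_wei)


crlist = ["0xa", "0xb"]
bids = [
    BuilderBid("honest", 10, frozenset({"0xa", "0xb", "0xc"})),
    BuilderBid("censoring", 50, frozenset({"0xa"})),
]
winner = select_block(bids, crlist)
print(winner.builder)
```

In this toy model the censoring builder loses despite bidding more, which is the incentive crList is meant to create.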

3-3. Ensuring scalability and trust: DAS, Erasure Coding, and KZG Commitments

In addition to PBS and crList, the secure and efficient implementation of Danksharding requires the preemptive introduction of several other technologies, most notably: 1) Data Availability Sampling (DAS), 2) Erasure Coding, and 3) KZG Commitment.

First, DAS addresses the potential centralization and scalability issues that can arise with the introduction of Danksharding. With the growing adoption of Ethereum, not only the number of rollups but also the amount of data these rollups need to process will grow, resulting in an exponential increase in the amount of data nodes need to store. This hinders scalability and leads to centralization as only a few nodes capable of handling such rapidly increasing data volume will survive (also known as the data availability problem). DAS ensures data availability (DA) and reduces the burden of large data on nodes, encouraging more nodes to join the network. This is also why it is more secure and reliable than other decentralized file systems, e.g., BitTorrent and IPFS, which facilitate data upload but do not guarantee DA.

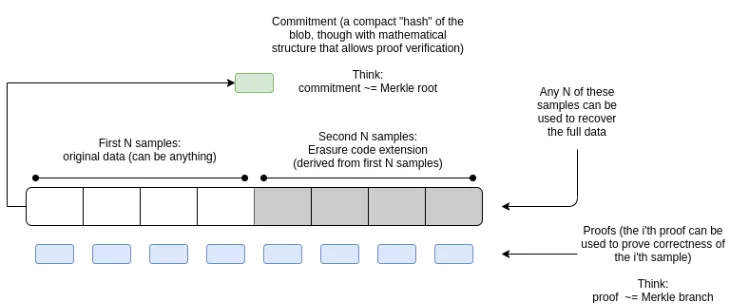

With DAS, nodes only verify whether at least 50% of the data in blobs is available, without downloading all of it. DA is guaranteed if availability exceeds 50%, and the secret behind this is erasure coding with Reed-Solomon codes. The essence of erasure coding is that the original data is extended to twice its size in a structured way, so that even if 50% of the extended data is lost, the entire data can be recovered from the remaining 50%. Vitalik Buterin writes in An explanation of the sharding + DAS proposal that the simplest mathematical analogy for understanding how erasure coding works is the idea that “two points are always enough to recover a line.” For example, if a file consists of four points (1, 4), (2, 7), (3, 10), and (4, 13) on one line, then any two of those points can be used to reconstruct the line and compute the remaining two points. This assumes that the x coordinates 1, 2, 3, 4 are fixed parameters of the system and are not the file creator’s choice. Extending this idea to higher-degree polynomials, 3-of-6 files, 4-of-8 files, and generally n-of-2n files can be created for arbitrary n. These files have the property that any n of the 2n points are enough to compute the remaining missing points. That is, even if only 50% of the erasure-coded data remains, the entire data can be reconstructed. However, there must be safeguards in place to ensure that the erasure coding is done properly. If a blob is filled with garbage data instead of a correct extension of the original data, the block will be irrecoverable. This is where the KZG commitment comes into play.

Erasure coding mechanism | Source: An explanation of the sharding + DAS proposal
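The "two points recover a line" example can be reproduced with Lagrange interpolation. The sketch below is a simplification: it works over exact rationals for readability, whereas production Reed-Solomon coding operates over a finite field, and the helper names `lagrange_eval` and `recover` are made up for this example.

```python
from fractions import Fraction


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through points."""
    total = Fraction(0)
    for i, (xi, yi) in enumerate(points):
        term = Fraction(yi)
        for j, (xj, _) in enumerate(points):
            if i != j:
                term *= Fraction(x - xj, xi - xj)
        total += term
    return total


def recover(known_points, all_xs):
    """Rebuild every (x, y) pair from any n surviving points of an n-of-2n file."""
    return [(x, lagrange_eval(known_points, x)) for x in all_xs]


# 2-of-4 file from the text: only (1, 4) and (3, 10) survive.
survivors = [(1, 4), (3, 10)]
restored = recover(survivors, [1, 2, 3, 4])
print(restored)
```

The same function handles the n-of-2n case: pass any n surviving points of a degree n-1 polynomial and the full list of 2n x coordinates.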

Similar to the proof systems in rollups, there are two ways to verify erasure coding: fraud proofs and zero-knowledge proofs. While Celestia, a layer-1 blockchain built with the Cosmos SDK that provides data availability, will use fraud proofs to ensure the integrity of erasure-coded data, the Ethereum community has decided to use a zero-knowledge proof system, specifically the 2D KZG commitment. With the 2D KZG commitment, 75 iterations of DAS are enough to establish, with a probability close to 1, that the entire data can be recovered. However, like SNARKs, it requires an initial trusted setup process, making the solution less than perfect. KZG commitments rely on a randomized value called a CRS (Common Reference String), determined during the initial setup phase. If a prover or proof creator were able to choose or learn this value, they could create a fraudulent proof that verifies as true even if they don't actually have the underlying data. As a result, some in the Ethereum community are proposing using 2D KZG commitments for now and switching to STARK proofs in the future. For more details, see KZG Polynomial Commitments by Dankrad Feist.
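To see why a few dozen samples are enough, consider a simplified model: if less than a fraction t of the extended data is available, each independent uniform sample succeeds with probability below t, so the chance that all k samples succeed is below t^k. The function name and the independence assumption are illustrative, and the threshold of 0.5 is taken from the 50% figure above (the 2D scheme may use a different threshold).

```python
def prob_fooled(samples: int, threshold: float = 0.5) -> float:
    """Upper bound on the chance that unrecoverable data passes every sample.

    Assumes independent, uniformly random samples and that data is
    unrecoverable whenever less than `threshold` of it is available.
    """
    return threshold ** samples


for k in (10, 30, 75):
    print(k, prob_fooled(k))
```

Under these assumptions the bound after 75 samples is far below one in a billion, which is why a light node can treat the data as available after sampling a tiny fraction of it.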

4. EIP-4844: Proto-Danksharding

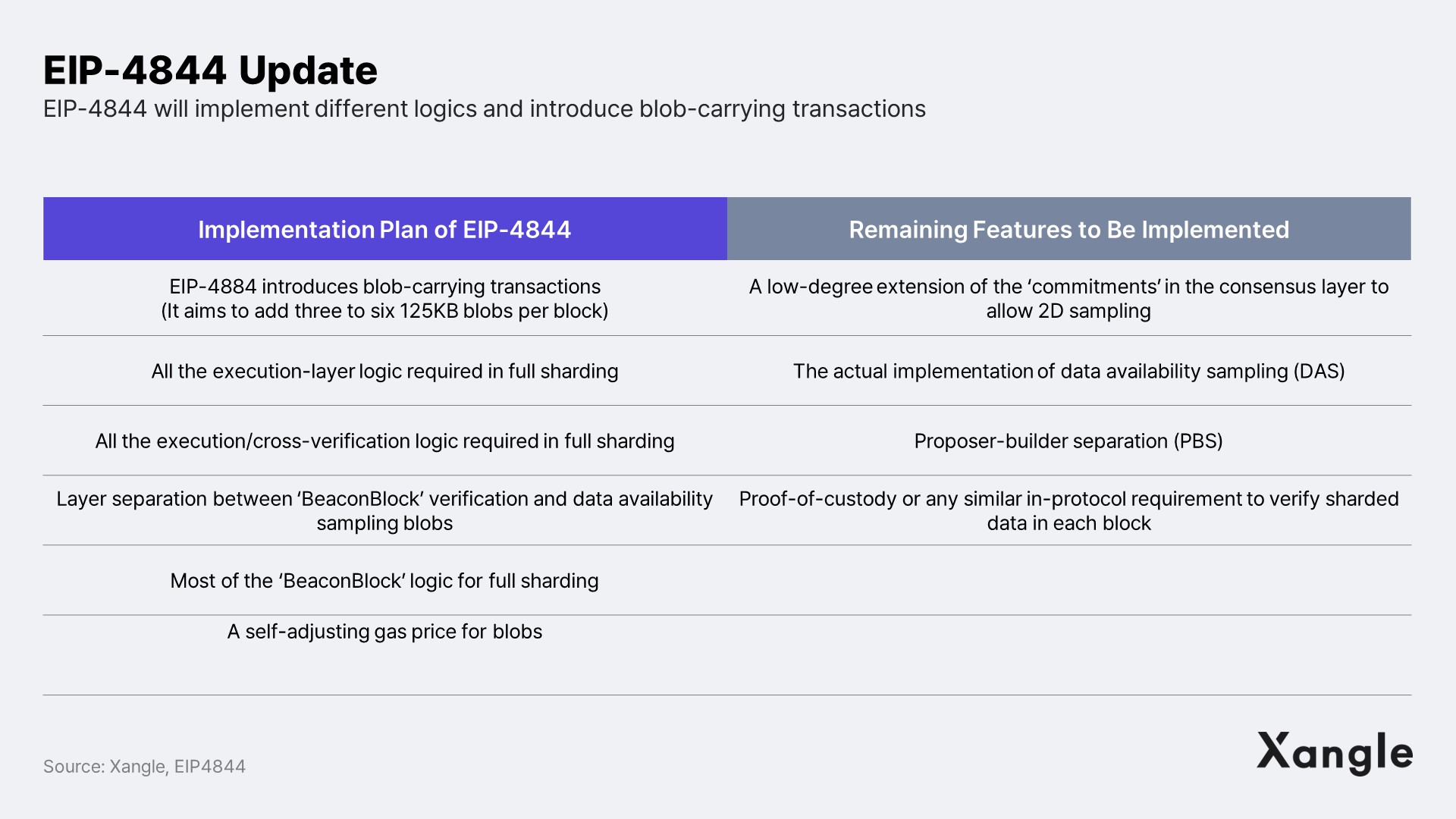

Though the exact specification of Danksharding has yet to be discussed further, and its actual deployment is expected to be years away, the Ethereum community has made the proactive decision to incorporate some of the parameters of Danksharding, such as EIP-4844, into the protocol during the upcoming Dencun upgrade.

4-1. EIP-4844, the cornerstone of Danksharding

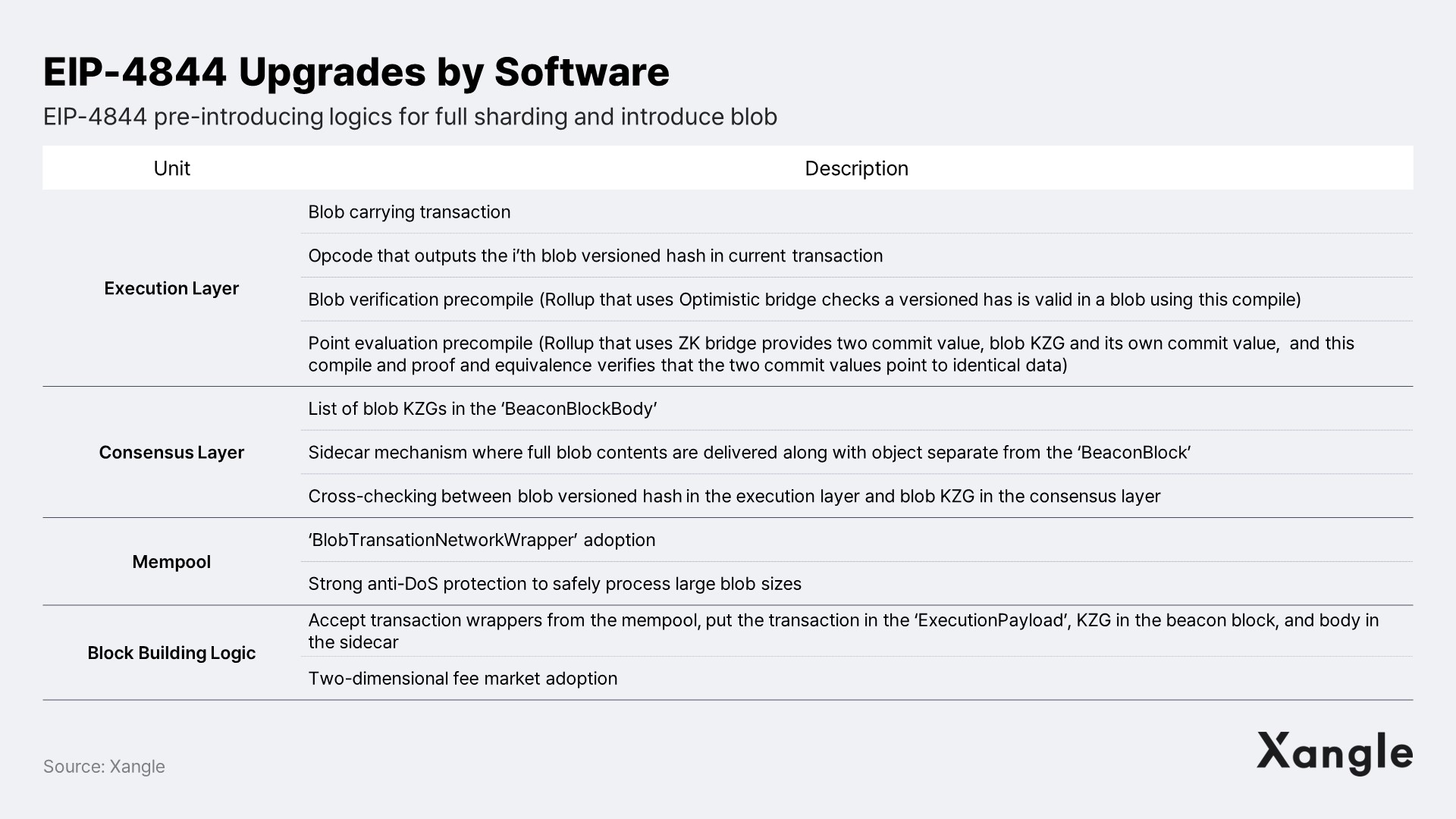

Despite its name, Proto-Danksharding, EIP-4844 does not actually shard the Ethereum database. Instead, EIP-4844 aims to 1) pre-introduce some of the logic required for a smooth transition of the protocol architecture to Danksharding in the future, and 2) introduce blob carrying transactions.

Blobs, the centerpiece of EIP-4844, stand for Binary Large Objects. Put simply, they are chunks of data attached to a transaction. Unlike regular transactions, blob data is only stored on the Beacon Chain and incurs very low gas fees. The purpose of blobs is to dramatically reduce the cost of DA (L1 publication) of rollups by creating a storage space exclusively dedicated to data availability, independent of blockspace. Currently, all rollups use the calldata space to write their data to Ethereum, which is expected to be replaced with blobs. For more information about Ethereum's data storage and why rollups currently use calldata, see annotation below.

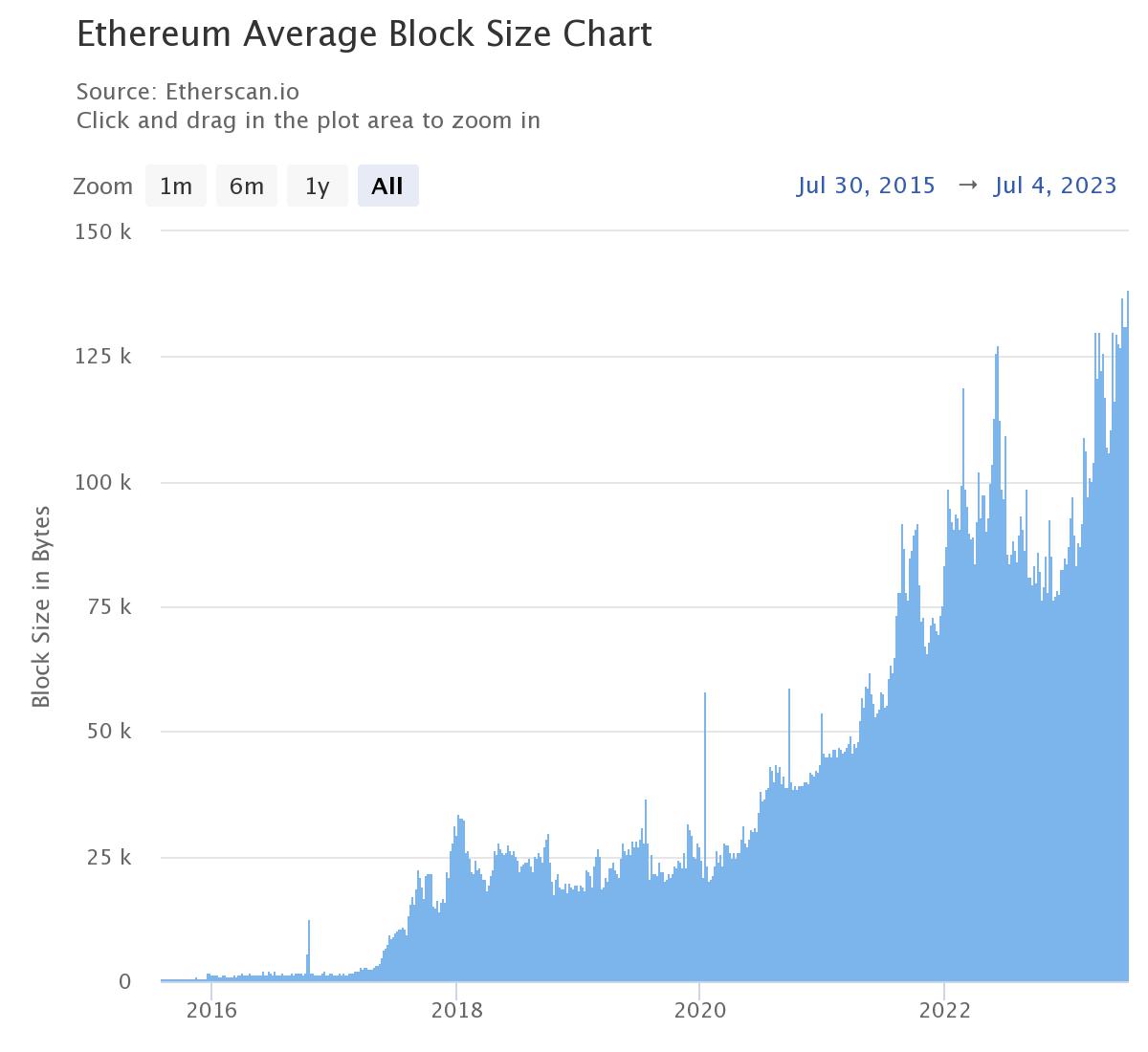

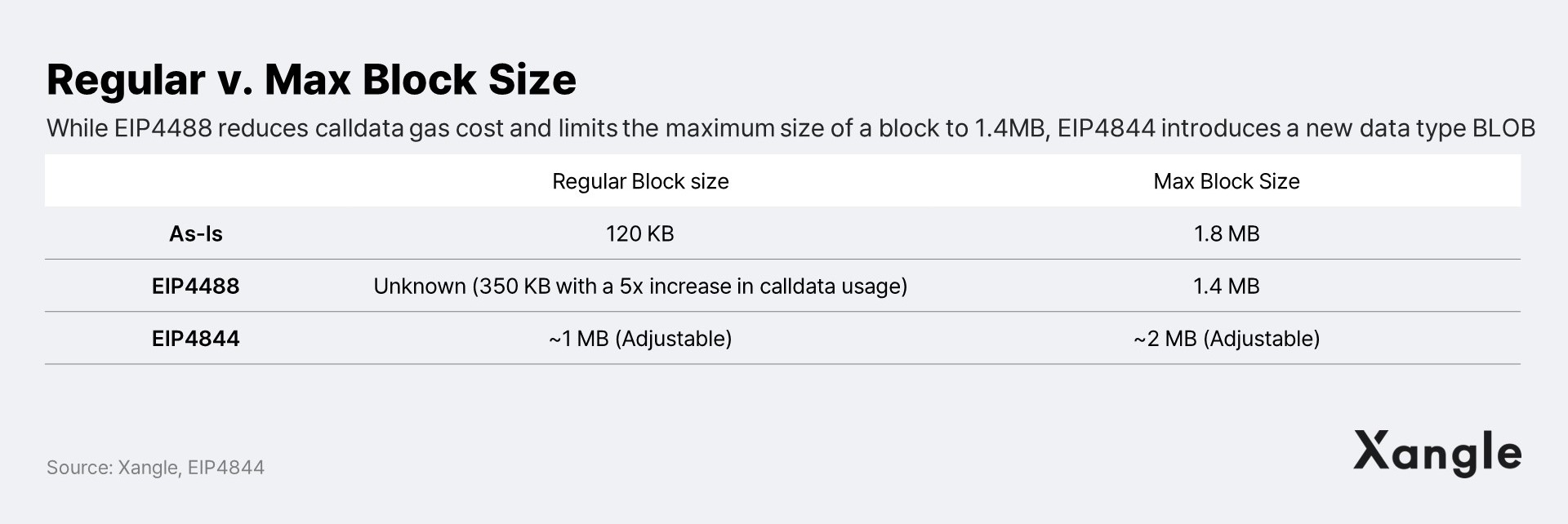

4-2. Simply reducing calldata cost can cause block size issues

The idea of reducing the DA cost of rollups predates EIP-4844. The initial proposal was simply to reduce the calldata fee (gas fee), but it was quickly dismissed due to block size issues. Suppose we want to reduce calldata gas fees to one-tenth. The average block size on Ethereum today is 120KB, with a theoretical maximum of around 1.8MB (assuming all 30M gas is used for calldata at 16 gas per byte). If we were to reduce the cost of calldata by a factor of 10, the average block size would still be manageable, but the maximum block size would be 18MB, far beyond what the network can handle. In other words, simply reducing the cost of calldata gas can seriously bloat the network.

Source: etherscan

A refinement of the above idea is EIP-4488, which was proposed in November 2021. EIP-4488 aims to reduce the calldata gas cost from 16 gas per byte to 3 gas per byte, while enforcing a maximum block size of 1.4MB.

The main concept behind EIP-4488 is to boost rollup usage by lowering calldata costs by 5.3 times, while simultaneously avoiding excessive block size growth through a hard cap on the maximum block size.
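The block size figures in this section follow from simple division. The sketch below recomputes them from the 30M gas limit and the per-byte calldata prices quoted in the text; the function name and the `discount` parameter are illustrative.

```python
BLOCK_GAS_LIMIT = 30_000_000   # max gas per block, as quoted above
CALLDATA_GAS_PER_BYTE = 16     # non-zero calldata byte, current price
EIP4488_GAS_PER_BYTE = 3       # price proposed by EIP-4488


def max_calldata_bytes(gas_limit: int, gas_per_byte: int, discount: int = 1) -> int:
    """Largest calldata payload if the whole block's gas is spent on calldata."""
    return gas_limit * discount // gas_per_byte


today = max_calldata_bytes(BLOCK_GAS_LIMIT, CALLDATA_GAS_PER_BYTE)
ten_x_cheaper = max_calldata_bytes(BLOCK_GAS_LIMIT, CALLDATA_GAS_PER_BYTE, discount=10)
eip4488_uncapped = max_calldata_bytes(BLOCK_GAS_LIMIT, EIP4488_GAS_PER_BYTE)
price_cut = CALLDATA_GAS_PER_BYTE / EIP4488_GAS_PER_BYTE

print(f"today:            {today / 1e6:.2f} MB")
print(f"10x cheaper:      {ten_x_cheaper / 1e6:.2f} MB")
print(f"EIP-4488, no cap: {eip4488_uncapped / 1e6:.2f} MB")
print(f"EIP-4488 price cut: {price_cut:.1f}x")
```

Without a cap, 3 gas per byte would allow roughly 10MB of calldata in a single block, which is why EIP-4488 pairs the price cut with a hard limit.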

EIP-4844, on the other hand, creates a new data type called blobs and introduces a fee market for them, which helps lower the DA (L1 publication) cost of rollups. EIP-4488 has a simple, intuitive logic that would allow faster deployment, so EIP-4844 may take longer to implement, but it has the advantage of introducing the updates required for Danksharding in advance. Once EIP-4844 is in place, execution clients will be ready for Danksharding, and only consensus clients will need to upgrade over time, which is another reason EIP-4844 was adopted.

4-3. Structure and creation of blob transactions

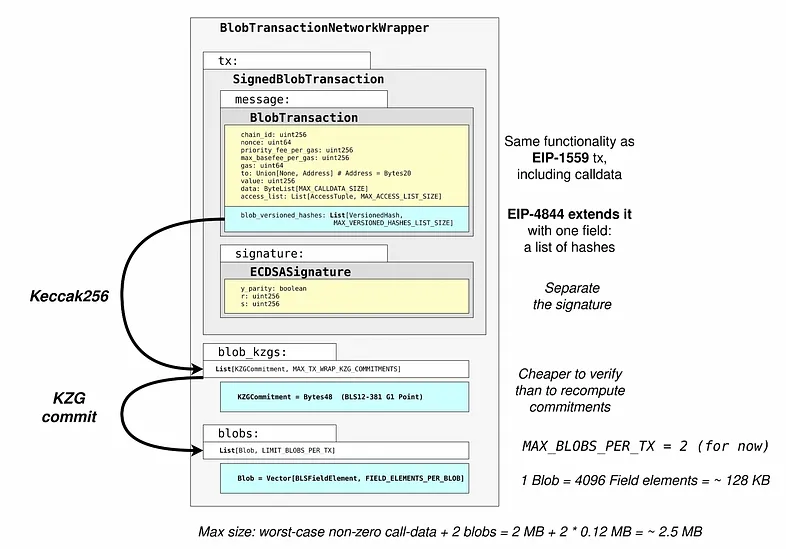

Now, let's delve deeper into blobs. Each blob consists of 4,096 fields, with each field containing 32 bytes, resulting in a blob size of 125KB*. According to the updated EIP-4844 document, an average of 3 blobs will be added to each block, with a maximum of 6 blobs, suggesting that the blob size per block will be between 375KB and 750KB. Upon the introduction of Danksharding, the target or maximum number of blobs will increase to 128 and 256, respectively, allowing for a blob space of 16MB to 32MB.

*In fact, 125KB is in no way a small amount of data. Assuming 1 byte per character and an average of 6 characters per word, 125KB is enough to hold about 21,000 English words. According to ChatGPT, 21,845 English words would fill about 77 A4 sheets (font Arial, size 10, line spacing 2).
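The blob sizes quoted above can be checked directly. Note that 4,096 fields of 32 bytes come to 131,072 bytes, which is 0.125 MiB; the "125KB" in the text is a rounding of that figure, and the word count in the footnote matches 131,072 divided by 6. The constant names below are chosen for this example.

```python
FIELDS_PER_BLOB = 4096
BYTES_PER_FIELD = 32
BLOB_BYTES = FIELDS_PER_BLOB * BYTES_PER_FIELD  # 131,072 bytes
MIB = 1024 * 1024


def blob_space_mib(blob_count: int) -> float:
    """Total blob space per block, in MiB, for a given number of blobs."""
    return blob_count * BLOB_BYTES / MIB


for label, count in [("EIP-4844 target", 3), ("EIP-4844 max", 6),
                     ("Danksharding target", 128), ("Danksharding max", 256)]:
    print(f"{label}: {count} blobs = {blob_space_mib(count)} MiB")

# Footnote check: 1 byte per character, 6 characters per word.
words_per_blob = BLOB_BYTES // 6
print(words_per_blob)
```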

Source: Deep in to EIP-4844, hackmd(@Yicheng-Chris)

In the BlobTransactionNetworkWrapper, which holds the data of a blob carrying transaction, there are three main components: 1) SignedBlobTransaction, comprising the message and signature, 2) blob_kzg, holding the blob commitment, and 3) blob, containing the blob data.

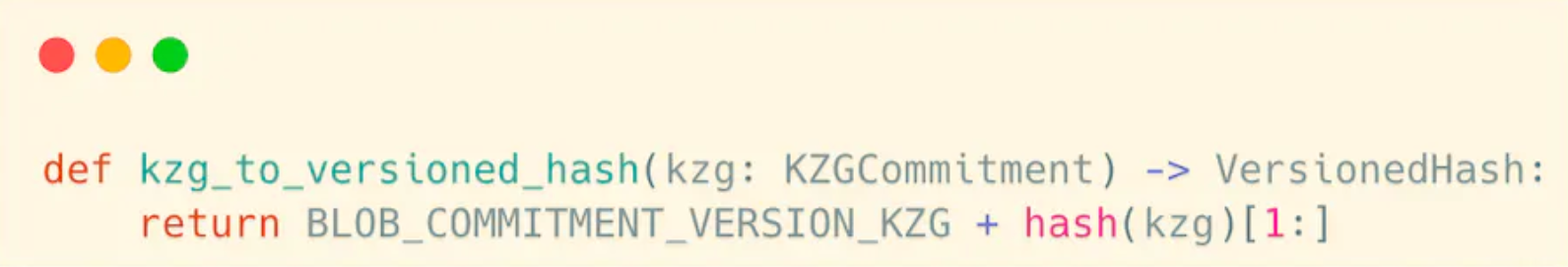

If you look at the message field in the SignedBlobTransaction, you will find that a field called blob_versioned_hashes is added to EIP-1559 transactions. Each entry in blob_versioned_hashes is 0x01 (a 1-byte value representing the version) followed by the last 31 bytes of the 32-byte SHA256 hash of the KZG commitment of the blob data. The KZG commitment is a summary of the blob data. The reason for hashing the 48-byte KZG commitment once more to 32 bytes is forward compatibility* with the EVM.

*The EVM uses a stack-based architecture, where the word size, representing the size of a data element on the stack, is 32 bytes or 256 bits.
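The versioned hash construction described above is short enough to write out. The sketch uses only `hashlib` from the standard library; the function and constant names are chosen for this example, and the 48 zero bytes are a placeholder, not a valid KZG commitment.

```python
import hashlib

VERSION_KZG = b"\x01"  # 1-byte version prefix described in the text


def kzg_to_versioned_hash(commitment: bytes) -> bytes:
    """0x01 followed by the last 31 bytes of SHA256(commitment)."""
    if len(commitment) != 48:
        raise ValueError("a KZG commitment is 48 bytes")
    return VERSION_KZG + hashlib.sha256(commitment).digest()[1:]


placeholder_commitment = bytes(48)  # illustrative input only
versioned_hash = kzg_to_versioned_hash(placeholder_commitment)
print(versioned_hash.hex())
```

The result always fits in one 32-byte EVM word, and the leading version byte leaves room to swap in a different commitment scheme later without changing the field's size.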

Source: mirror.xyz(@yicheng.eth)

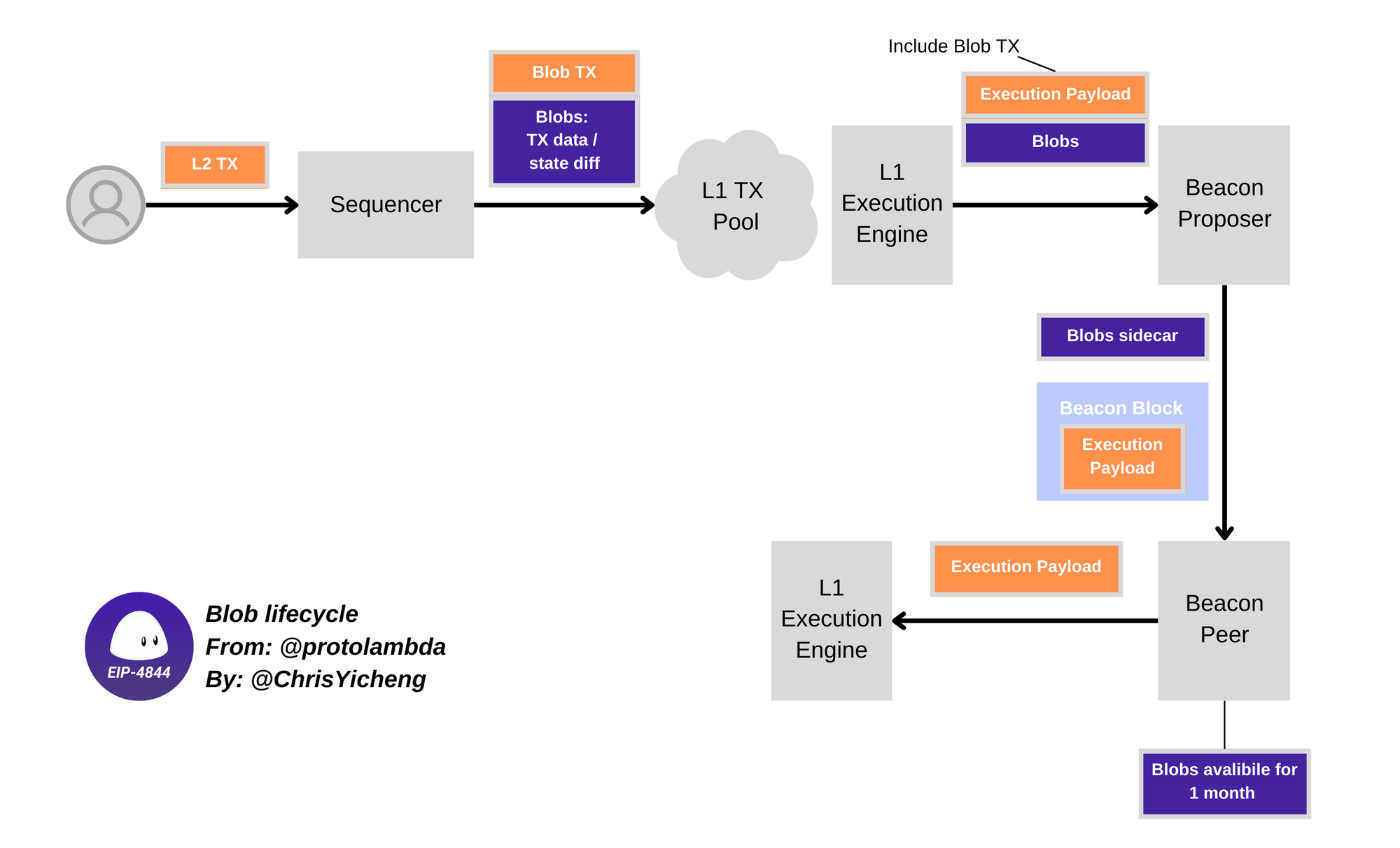

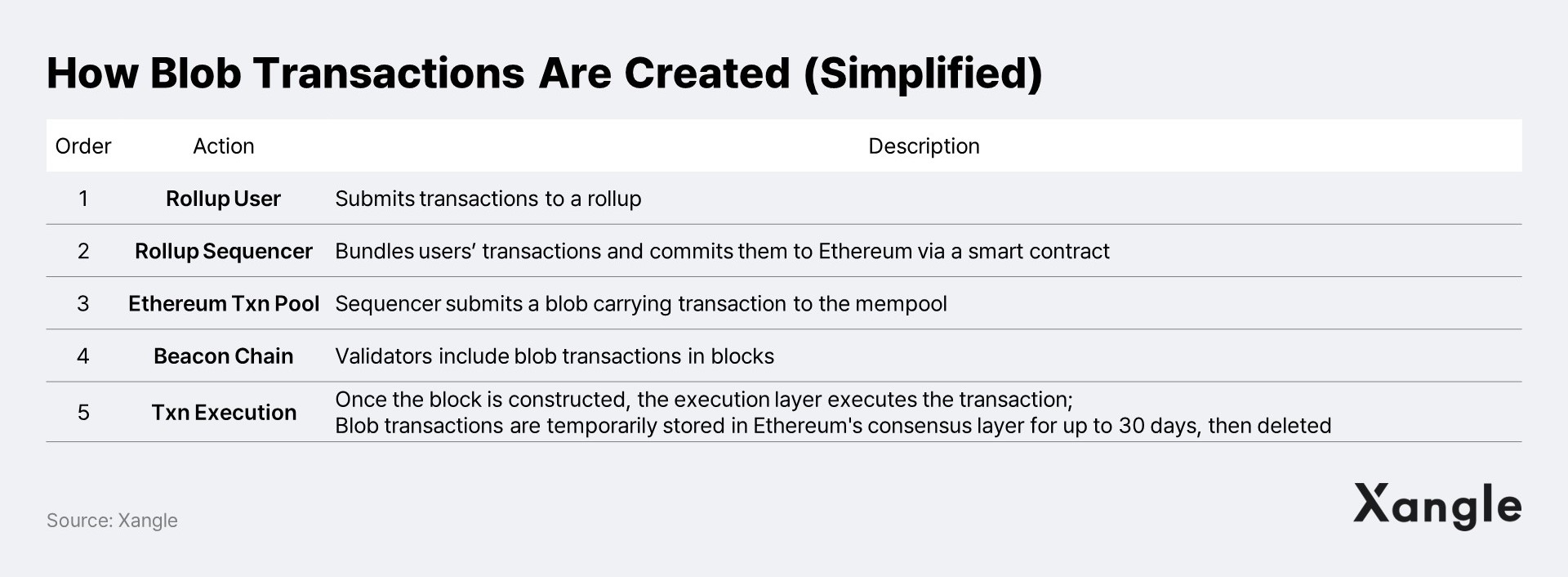

So, why are blob_versioned_hashes included in the transaction in the first place? This can be answered by looking at the creation process of a blob transaction, which will also explain why blob_kzg and blob are separate from SignedBlobTransaction (see figure below).

Here’s how a blob transaction is created. First, rollup users submit transactions, and a sequencer collects them and sends them to the L1 transaction mempool. Subsequently, the Ethereum proposer propagates the blob transaction in the mempool to the nodes in two parts: the SignedBlobTransaction as the Execution Payload, and the blob_kzg and blob as the Sidecar. The Execution Payload, which contains the actual transaction information, can be downloaded from the execution client (L1 execution engine), and the Sidecar from the consensus client. Notably, the blob data is only stored in the Beacon Chain, meaning that the EVM cannot access the sidecar containing the blob data. This explains why blob_versioned_hashes are included in transactions and why a KZG commitment is necessary.

Previously, rollups used calldata, allowing the EVM to access the calldata to execute the rollup transactions and prove their validity. However, once blobs are introduced and rollups start using them, the EVM no longer has access to the rollup data. Hence, the blob_versioned_hashes, which summarize the blob data, are included in the SignedBlobTransaction. Nodes can then read the blob_versioned_hashes available on the EVM execution layer and cross-check them against the blob_kzg commitment at the consensus layer.

It is also noteworthy that Sidecar information containing blob data is deleted from the Beacon Chain after 30 days. This means that a blob’s DA is only guaranteed for a month, which Buterin explained is because the role of the consensus protocol is to provide "a highly secure real-time bulletin board," not to store data permanently. Storing blob data permanently on the chain would result in accumulating more than 2.5TB of data every year, potentially overwhelming the Ethereum network. That volume is nevertheless manageable for individuals, since HDD storage costs about $20 per 1TB. Therefore, it would be ideal to split the responsibility of permanently storing blob data across various parties that are better suited to handling large data volumes, including rollups, API providers, decentralized storage systems (e.g. BitTorrent), explorers (e.g. Etherscan), and indexing protocols (e.g. The Graph). Moreover, since the execution payload containing the blob_versioned_hashes is still stored on the blockchain, it should be possible to prove the validity of data that is over 30 days old against the original KZG commitment.
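The storage figures can be sanity-checked with a rough calculation. The sketch assumes 12-second slots and a blob payload in every block. The 2.5TB figure appears consistent with roughly 1MB of blob data per block; that is an inference about how the number was derived, not something stated in the text, and the 3-blob case is shown for comparison.

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_DAY = 24 * 3600 // SECONDS_PER_SLOT   # 7,200
SLOTS_PER_YEAR = 365 * SLOTS_PER_DAY            # 2,628,000
BLOB_BYTES = 4096 * 32


def yearly_bytes(bytes_per_block: int) -> int:
    """Blob data accumulated in a year if nothing were ever pruned."""
    return bytes_per_block * SLOTS_PER_YEAR


def retained_bytes(bytes_per_block: int, days: int = 30) -> int:
    """Blob data a node holds at once under a rolling retention window."""
    return bytes_per_block * SLOTS_PER_DAY * days


print(f"1 MB/block, per year:    {yearly_bytes(1_000_000) / 1e12:.2f} TB")
print(f"3 blobs/block, per year: {yearly_bytes(3 * BLOB_BYTES) / 1e12:.2f} TB")
print(f"3 blobs/block, 30 days:  {retained_bytes(3 * BLOB_BYTES) / 1e9:.1f} GB")
```

With a 30-day window, the data a node holds at any moment stays in the tens of gigabytes rather than growing by terabytes per year.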

5. Impact of EIP-4844 on Rollup Costs

5-1. DA (L1 publication) costs currently account for over 90% of total rollup costs

Before examining the impact of EIP-4844 on the rollup economic model, it is essential to understand the revenue structure of rollups. The revenue generated by rollups is divided into two main components: network fees and MEVs. When we refer to revenue, we are specifically referring to operator/sequencer revenue, which is currently monopolized by the project's operating entity, given that all Rollups run on a single operator system.

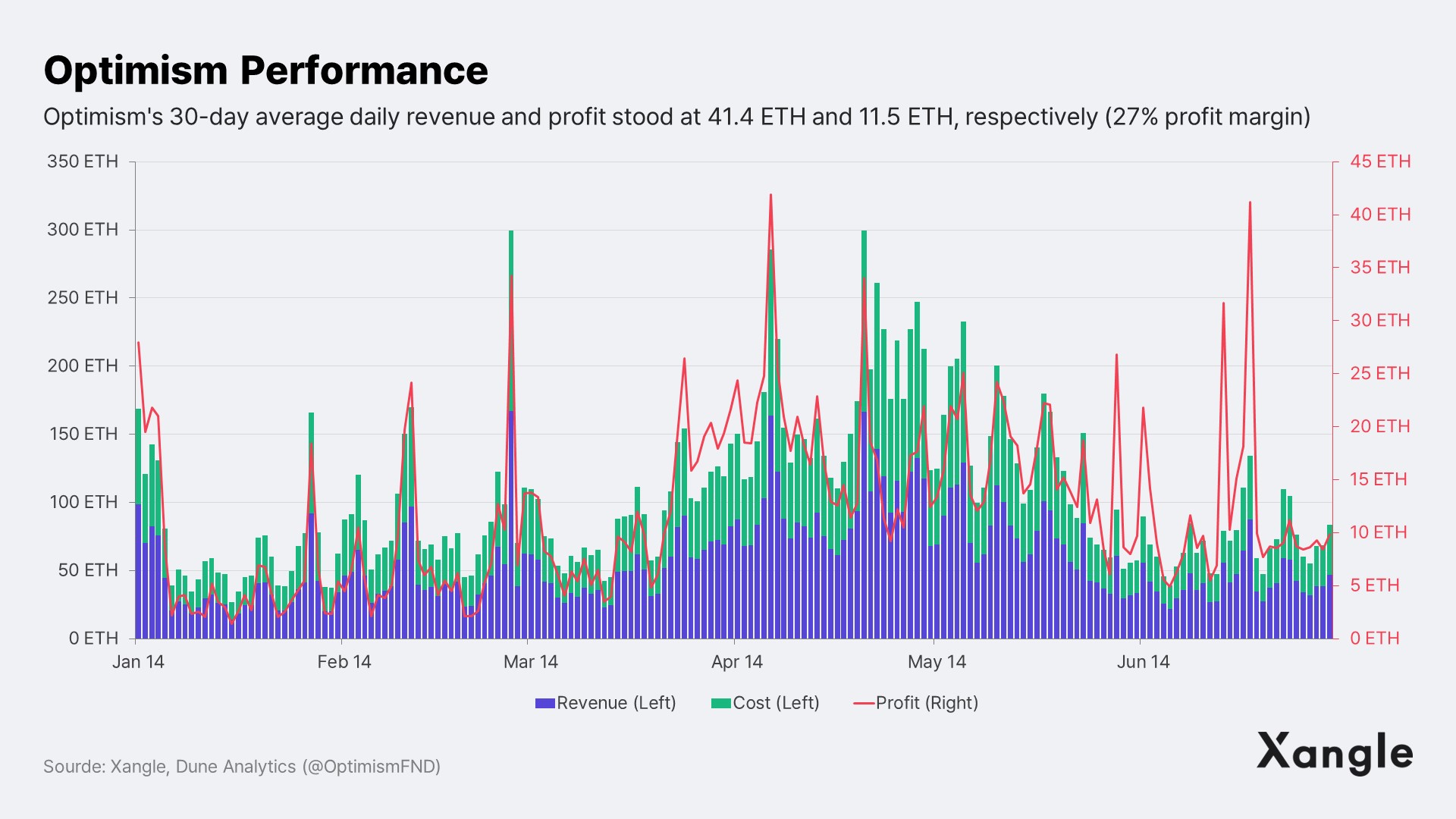

Network fee revenue consists of the gas fees that rollup users pay to the operator when making transactions, covering the cost of running the rollup plus the additional fee. Charging users the fee serves two purposes: to generate profit similar to any other business and to mitigate potential losses in the event of a sudden surge in gas costs at the base layer (operators collect gas fees from users before submitting batches to the base layer). As of July 13, Optimism's 30-day average daily revenue and profit stood at 41.4 ETH and 11.5 ETH, respectively, resulting in a 27% profit margin.

Many rollup projects, including Arbitrum, adopt a First Come First Serve (FCFS) basis, which means that MEV profits are virtually non-existent (though it may not be verifiable, the official stance of rollups is FCFS). As mentioned earlier, rollups currently function under a single operator system, and without competition in block generation, monopolizing MEVs could raise concerns about centralization. It is likely that these rollups will only benefit from MEVs once the operator becomes decentralized (for complementary reading, check out Xangle’s Shared Sequencer Network: A Middleware Blockchain for Decentralizing Rollups). Interestingly, Optimism appears to be leveraging MEV effectively, as priority fees are aggregated, constituting 10-20% of total transaction fees as of June 2023.

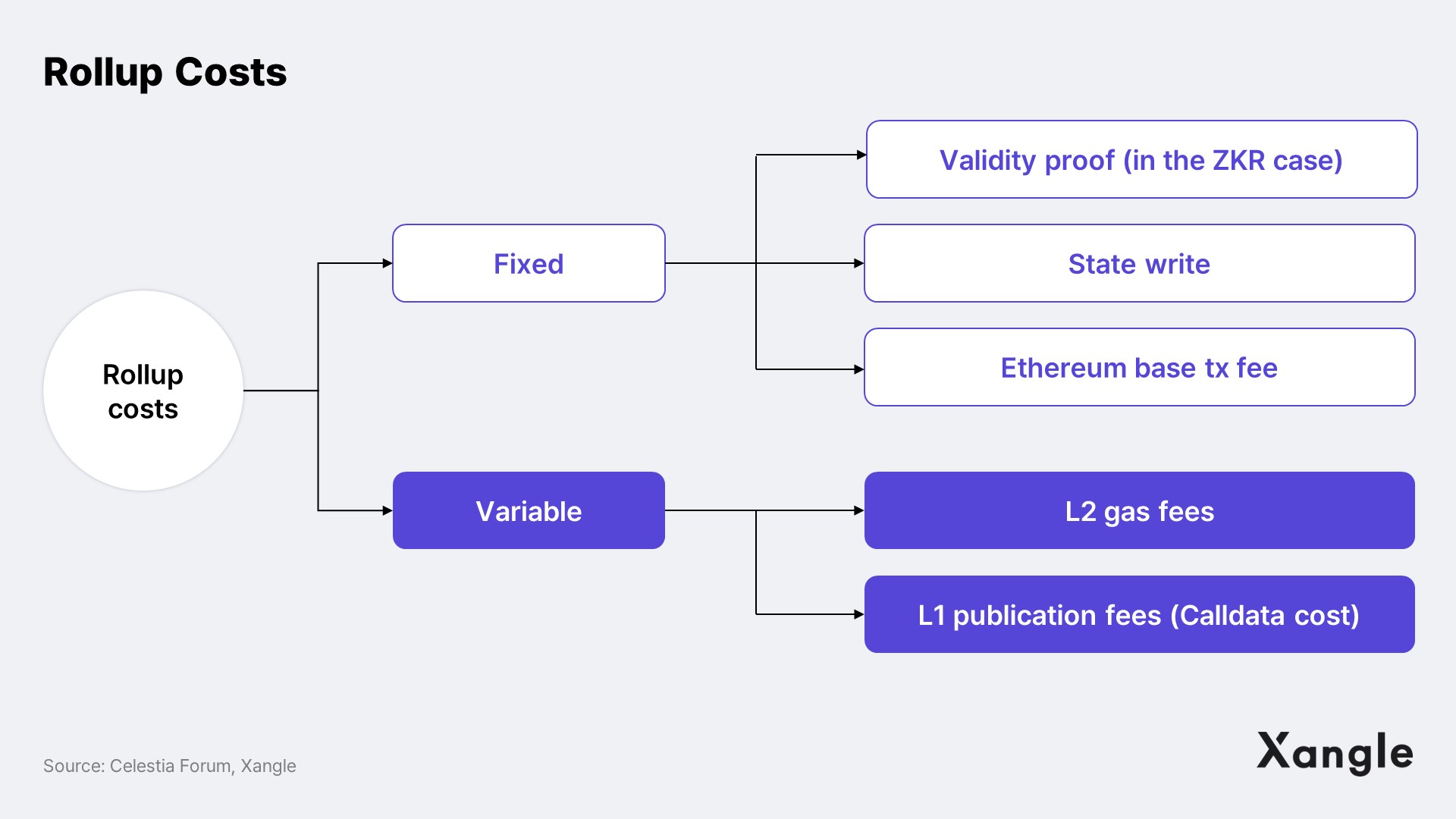

Rollup costs can be categorized into fixed and variable costs. The fixed costs encompass three components: 1) state write fee, incurred when submitting the state root to the rollup smart contract, 2) validity proofs (applicable for ZK rollups), and 3) Ethereum base transaction fee (21,000gas). On the other hand, the variable costs are divided into 1) L2 gas fee for transaction operation and 2) L1 publication fee for storing batch data in Ethereum blocks.

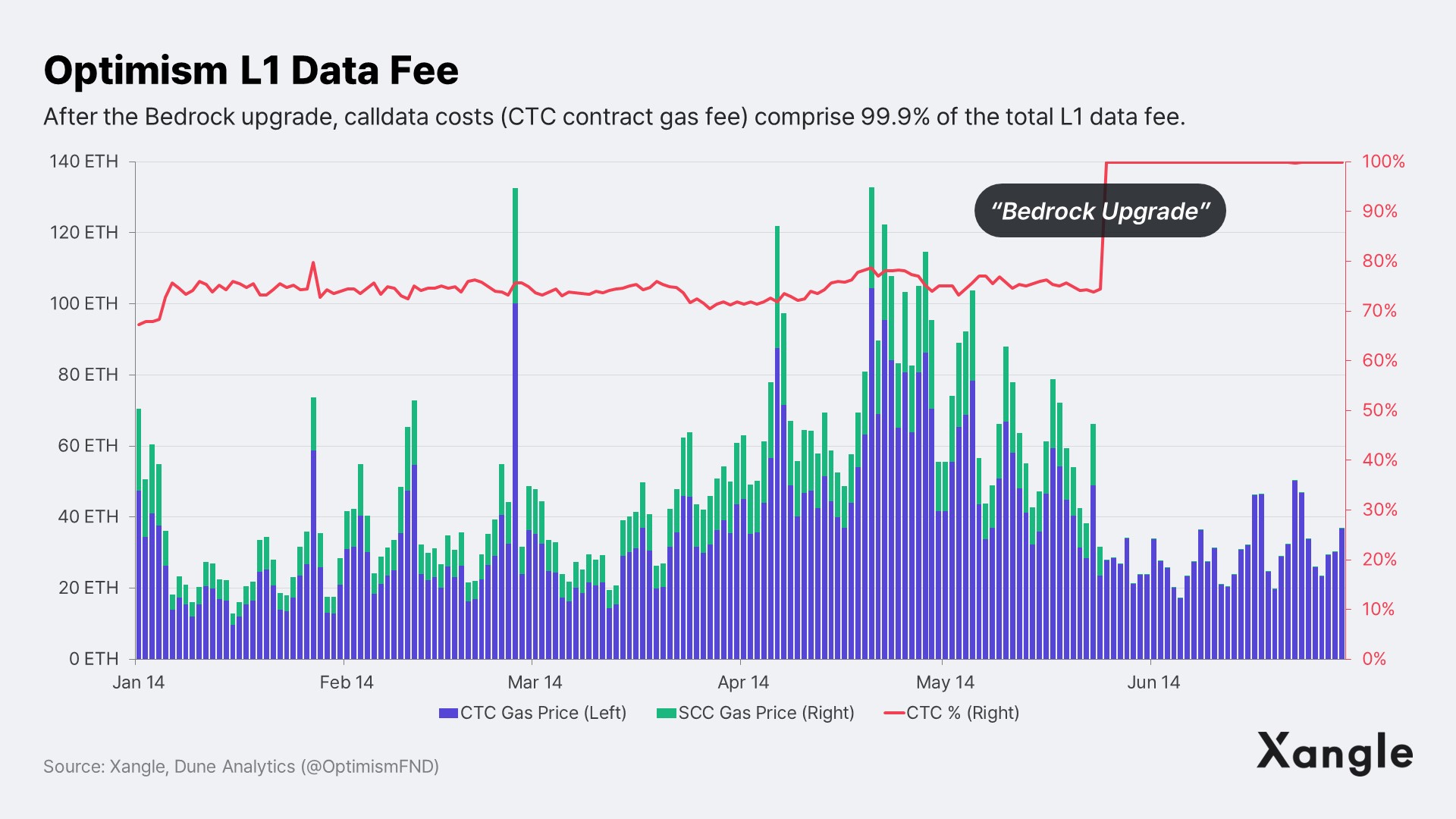

Currently, the second part of the variable cost is the dominant cost factor. Rollups use calldata to store batch data in Ethereum blocks, and the calldata fee is 4 gas/byte for zero bytes (0x00) and 16 gas/byte for non-zero bytes (we explain why rollups use calldata in the annotation). While 16 gas/byte is considerably economical compared to using storage, it's important to consider that batch data is inherently substantial. As of February/March 2023, when demand for Optimistic rollups surged, Arbitrum and Optimism were storing 14.6KB and 13KB of data per block, respectively. Consequently, the cost of calldata currently constitutes approximately 99% of the total rollup cost.

5-2. With the implementation of EIP-4844, the DA cost of rollups is expected to be almost free, and a significant increase in the blob fee would require the demand for rollups to grow by more than 10 times

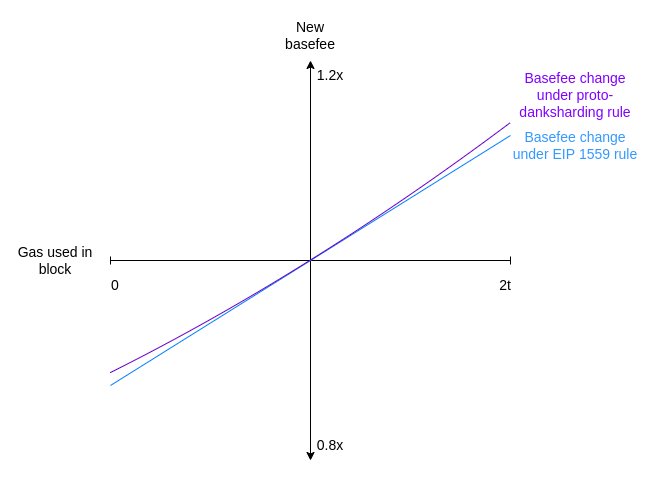

As per EIP-4844 parameters, blob fees (gas fees) will be dynamically determined by the supply/demand of blobs within its own data gas fee market, which is not affected by blockspace demand. Consequently, after the implementation of EIP-4844, Ethereum will operate with a two-dimensional EIP-1559 fee market consisting of 1) an EIP-1559-based fee market for regular transactions and 2) a blob fee market where fees are determined solely by the supply and demand of blobs.

The functioning of the data gas fee market outlined in EIP-4844 is similar to the EIP-1559 mechanism. Blob transactions include all the fields required by the EIP-1559 mechanism, including max_fee, max_priority_fee, and gas_used, plus a max_fee_per_data_gas field indicating the maximum price per unit of data gas the user is willing to pay. The data gas structure is also divided into base fee and priority fee, similar to EIP-1559. However, there is still an ongoing discussion about whether the base fee should be burned.

Just as EIP-1559 sets a gas target of 15M gas per block with a maximum of 30M gas, the blob market sets TARGET_DATA_GAS_PER_BLOCK at 375KB and MAX_DATA_GAS_PER_BLOCK at 750KB. By default, blob data is charged 1 data gas per byte, and the minimum data gas price is set at 1 wei. Similar to EIP-1559, the base fee can move by up to ±12.5% per block depending on how much data gas is actually used compared to the targeted data gas (TARGET_DATA_GAS_PER_BLOCK). In the initial stages of EIP-4844 deployment, the usage of blobs is expected to be relatively low, even with high network activity. As a result, the cost of the blob itself is likely to be low during this period.

The EIP-4844 gas fee mechanism is highly similar to EIP-1559 | Source: EIP-4844, Vitalik Buterin
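The pricing rule can be sketched as follows. The structure (a running "excess data gas" counter fed into an integer approximation of an exponential) follows the approach described in the EIP-4844 specification, but the constant names and in particular the update fraction of 3,338,477 are assumptions based on one version of the spec and may differ from the parameters in force at any given time.

```python
MIN_DATA_GASPRICE = 1                      # wei, as stated in the text
DATA_GAS_PER_BLOB = 4096 * 32
TARGET_DATA_GAS_PER_BLOCK = 3 * DATA_GAS_PER_BLOB
MAX_DATA_GAS_PER_BLOCK = 6 * DATA_GAS_PER_BLOB
DATA_GASPRICE_UPDATE_FRACTION = 3_338_477  # assumed value, see note above


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer Taylor-series approximation of factor * e**(numerator / denominator)."""
    i = 1
    output = 0
    term = factor * denominator
    while term > 0:
        output += term
        term = (term * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_data_gas(parent_excess: int, parent_used: int) -> int:
    """Excess grows when a block uses more than the target and shrinks otherwise."""
    return max(0, parent_excess + parent_used - TARGET_DATA_GAS_PER_BLOCK)


def data_gasprice(excess_data_gas: int) -> int:
    return fake_exponential(MIN_DATA_GASPRICE, excess_data_gas,
                            DATA_GASPRICE_UPDATE_FRACTION)


# One completely full block on top of a balanced market, priced with a
# large factor so the per-block ratio is visible despite integer rounding.
excess = next_excess_data_gas(0, MAX_DATA_GAS_PER_BLOCK)
ratio = fake_exponential(10**9, excess, DATA_GASPRICE_UPDATE_FRACTION) / 10**9
print(f"price multiplier after one full block: {ratio:.4f}")
```

Under these parameters a full block raises the price by about 12.5% and an empty block lowers it by a similar proportion, which mirrors the EIP-1559 behavior described above. While usage stays at or below target, the excess stays at zero and the price sits at the 1 wei minimum.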

So how significant is the cost reduction brought about by EIP-4844 specifically for rollups? For this analysis, we recommend the research report by dcrapis titled "EIP-4844 fee market analysis." In the report, dcrapis examines the impact of EIP-4844 on the rollup economic model using the batch data that Arbitrum and Optimism posted in January-February 2023. Based on the analysis, the report draws the following conclusions (Arbitrum and Optimism data are used because their calldata usage represents 98% of all L2 calldata usage):

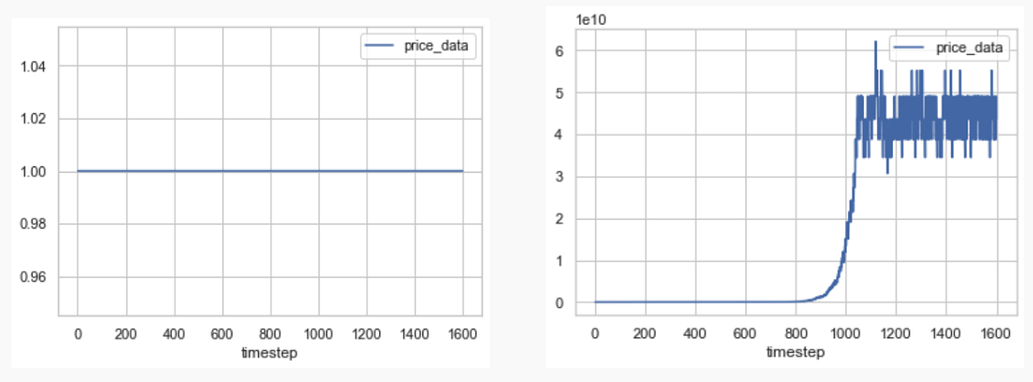

Conclusion 1. The current demand for blobs is about 10x lower than the target level (based on 250KB per block) and is estimated to take about 1-2 years to reach the desired level

- As of January-February 2023, Arbitrum generated 1,055 batches of ~99 KB in size every 6.78 blocks (equivalent to 100 MB per day, or 14.6 KB per block). During the same period, Optimism produced 2,981 batches of ~31 KB in size every 2.37 blocks (equivalent to 93 MB per day, or 13 KB per block). When combining the data generated by both rollups, the average size comes to 27.6 KB per block. Consequently, the current demand for the rollups represents only one-tenth of the targeted blob amount of 250 KB (based on having two blobs per block).

- When latent demand is higher than the blob target, EIP-4844’s price discovery mechanism raises the price of data gas, and blob transactions with the lowest willingness to pay are dropped to maintain price equilibrium. From January 2022 to December 2022, the total data demand for Ethereum rollups increased by approximately 4.4x. At this pace, it would take about 1.5 years for the price discovery mechanism to kick in and for the data gas price to exceed 1.
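The figures in Conclusion 1 can be reproduced from the numbers quoted in the two bullets. The sketch assumes demand keeps compounding at the 2022 rate of 4.4x per year, which is the report's extrapolation rather than a guarantee; the variable names are chosen for this example.

```python
import math

ARBITRUM_KB_PER_BLOCK = 14.6
OPTIMISM_KB_PER_BLOCK = 13.0
TARGET_KB_PER_BLOCK = 250.0   # two blobs per block, as used in the report
YEARLY_GROWTH = 4.4           # Jan 2022 to Dec 2022 growth in rollup data demand

combined = ARBITRUM_KB_PER_BLOCK + OPTIMISM_KB_PER_BLOCK
gap = TARGET_KB_PER_BLOCK / combined
years_to_target = math.log(gap) / math.log(YEARLY_GROWTH)

print(f"combined demand: {combined:.1f} KB per block")
print(f"target is {gap:.1f}x current demand")
print(f"years to reach target at 4.4x/year: {years_to_target:.2f}")
```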

Projected evolution of data gas prices based on rollup demand | Source: EIP-4844 Fee Market Analysis

Conclusion 2. Fees will remain nearly zero until blob demand approaches the target level

- It takes about 1.89M gas to record one Arbitrum batch on Ethereum. Assuming an average gas price of 29gwei and an $ETH price of $1500, the DA cost per batch is about $85, of which the calldata cost is about $70. When converting this to blobs, the cost of the precompile call is about $2.2 (50k gas) and the DA cost is $2e-10 (125k data gas), making it virtually free. However, such a low cost could potentially lead to issues such as spamming or DDoS attacks.
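The dollar figures above follow from gas multiplied by gas price and ETH price. The sketch below redoes the arithmetic with the stated assumptions (29 gwei, $1500 per ETH, data gas at the 1 wei minimum); the helper name `cost_usd` is made up for this example. The straight product for 1.89M gas comes out near $82, slightly below the rounded $85 quoted above.

```python
WEI_PER_ETH = 10**18
GWEI = 10**9


def cost_usd(gas: int, price_wei: int, eth_usd: float) -> float:
    """Fee in USD for `gas` units at `price_wei` per unit."""
    return gas * price_wei / WEI_PER_ETH * eth_usd


ETH_USD = 1500
GAS_PRICE = 29 * GWEI

batch_today = cost_usd(1_890_000, GAS_PRICE, ETH_USD)   # whole batch via calldata
precompile = cost_usd(50_000, GAS_PRICE, ETH_USD)       # blob verification call
blob_da = cost_usd(125_000, 1, ETH_USD)                 # 125k data gas at 1 wei

print(f"batch today:     ${batch_today:.2f}")
print(f"precompile call: ${precompile:.2f}")
print(f"blob DA:         ${blob_da:.2e}")
```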

Conclusion 3. In the event that the demand for blobs surpasses the target level, the cost of data gas will experience exponential growth, potentially increasing by more than 10x in a matter of hours

- Once blob demand exceeds the target level, the price of data gas increases exponentially every 12 seconds until the transactions with the lowest willingness to pay are dropped and demand decreases. As the demand for rollups is structurally inelastic and remains steady, this mechanism implies that costs can escalate by a factor of 10 or more in a short span of time. Consequently, blob consumers are expected to face significant challenges in dealing with soaring prices.
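To get a feel for how fast this escalation can be, here is a simplified floating-point model of the update rule. It is a sketch, not the integer arithmetic of the specification: it assumes a target of 3 blobs and a maximum of 6 blobs per block, and it assumes the price rises by about 12.5% after a completely full block. All names and constants below are illustrative assumptions.

```python
import math

TARGET_BLOBS = 3         # assumed target per block
MAX_BLOBS = 6            # assumed maximum per block (twice the target)
MAX_STEP = 1.125         # assumed price multiplier after one completely full block
SECONDS_PER_BLOCK = 12


def next_excess(excess, used, target=TARGET_BLOBS):
    """Running excess: grows when a block is above target, shrinks below it, never negative."""
    return max(0.0, excess + used - target)


def price_multiple(excess, target=TARGET_BLOBS, max_step=MAX_STEP):
    """Data gas price relative to the minimum price, exponential in the running excess."""
    return math.exp(excess / target * math.log(max_step))


def blocks_until(multiple, used, target=TARGET_BLOBS, limit=10_000):
    """Blocks of constant usage needed for the price to rise by `multiple`; None if it never does."""
    excess = 0.0
    for n in range(1, limit + 1):
        excess = next_excess(excess, used, target)
        if price_multiple(excess, target) >= multiple:
            return n
    return None


for used in (MAX_BLOBS, 4, TARGET_BLOBS):
    n = blocks_until(10, used)
    if n is None:
        print(f"{used} blobs per block: price never rises 10x")
    else:
        print(f"{used} blobs per block: 10x after {n} blocks ({n * SECONDS_PER_BLOCK} s)")
```

In this model a run of completely full blocks multiplies the price by ten within a few minutes, demand only slightly above the target takes correspondingly longer, and demand exactly at the target leaves the price unchanged.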

5-3. Changing the cost structure of blobs is being actively discussed within the Ethereum community

Given the potential issues outlined earlier, the Ethereum community is actively exploring options to change the cost structure of blobs. For example, Dankrad Feist proposed in EIP PR-5862 to change the minimum price of data gas from 1 wei/byte to 1 gwei/byte. However, some, including Micah Zoltu, are concerned that raising the minimum price will make the DA (L1 publication) cost of rollups excessively expensive again if the $ETH price increases by 100-1,000 times in the future (though I personally believe that a 1,000x increase in ETH price may not be an immediate concern). Another alternative being considered is lowering the data gas target per block from 3 blobs to 1.5 blobs. While this change might not entirely eliminate spamming, it offers the advantage of halving the time required to reach the target.

6. Closing Thoughts

EIP-4844 aims to reduce the DA cost of rollups using blobs and to introduce, in advance, some of the upgrades required to implement full Danksharding. Once consensus-layer upgrades such as PBS and DAS are completed after EIP-4844, full Danksharding can be implemented. However, it is important to note that Danksharding is still in the early stages of research and the Ethereum community has yet to establish a concrete specification. As a result, it is likely to be several years before we witness its actual implementation.

Proto-Danksharding aims to add three to six 125 KB blobs per block. Considering the current average block size on Ethereum, which is around 120 KB, this results in approximately three to six times more DA-only storage space per block (after full Danksharding, the number of blobs will increase to about 128 to 256, thereby freeing up 16 to 32 MB of space per block).

Blob fees are expected to be significantly lower than calldata fees, and based on the current EIP-4844 specification and rollup demand, we anticipate that blob fees will be basically free. Given the trend in rollup demand over the past year, we estimate that it might take about one to two years for blob demand to reach the level at which price discovery begins. In the short to medium term after the introduction of EIP-4844, rollups could potentially offer the lowest transaction fees among all existing blockchains. However, some express concerns about potential side effects and propose either 1) higher blob fees or 2) a lower target number of blobs to expedite the price discovery process. Nevertheless, what remains certain is that EIP-4844 will significantly contribute to the widespread adoption of rollups. The crypto industry is gaining momentum with recent events such as the XRP ruling and major financial institutions like BlackRock and Fidelity filing applications for a spot Bitcoin ETF. It will be intriguing to see whether EIP-4844 can play a role in ushering in a “rollup summer.”

Annotation) Types of Ethereum data storage

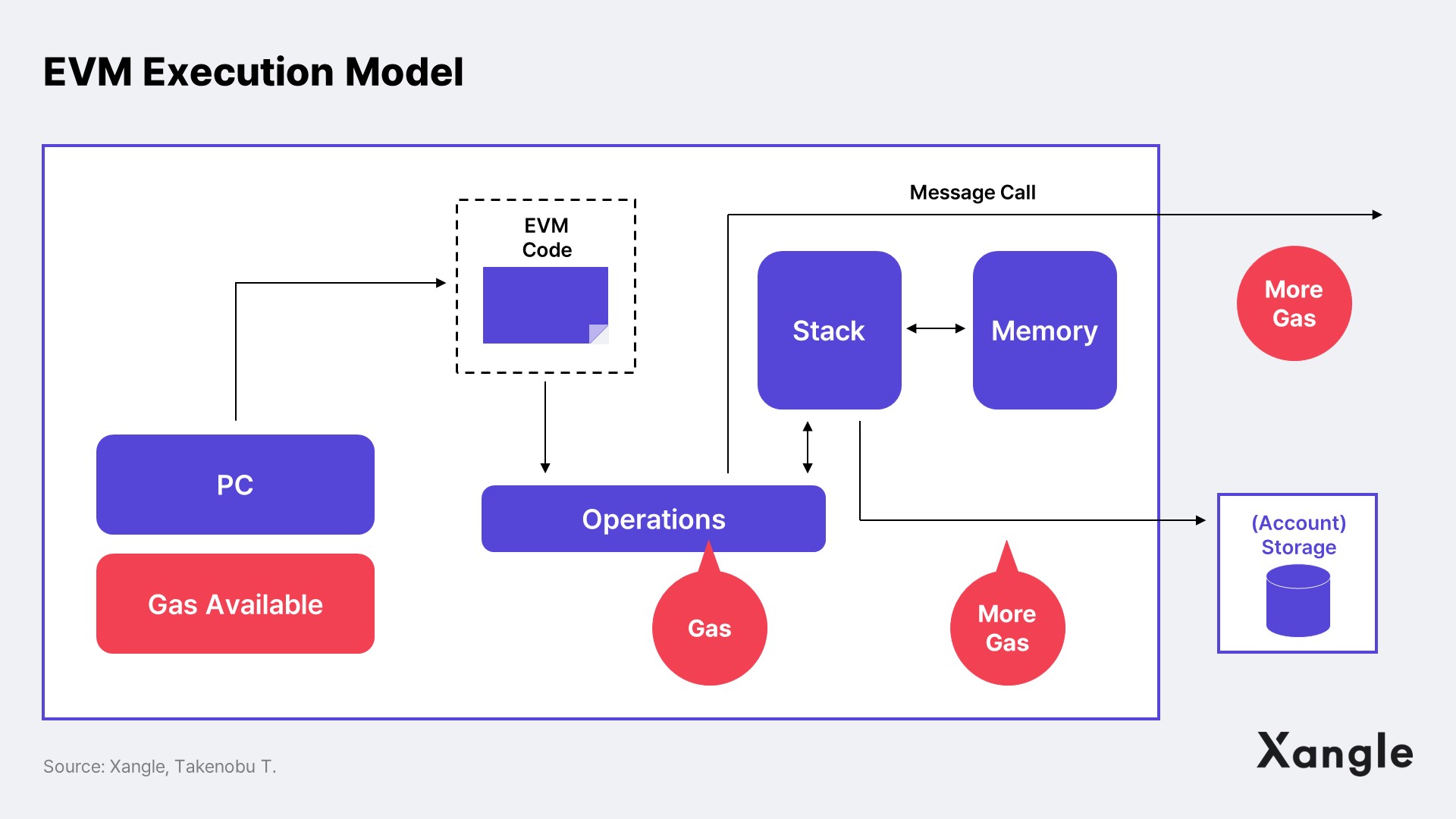

A smart contract is a computer program that runs on a blockchain and consists of functions and data called variables or parameters. The data used by these functions is held in the data areas of the machine that runs the contract (the EVM), and contracts written in Solidity, the most widely used EVM programming language, work with four main data locations: storage, memory, stack, and calldata.

A1. The main storage spaces in EVM are categorized as Storage, Memory, Stack, and Calldata

First, storage is a space used to permanently store data in EVM, like a computer's file system (HDD/SSD). Unlike memory, which exists only while a transaction is executed, variables stored here persist across transactions and can be read and written at any time. Each contract has its own storage space, and it can only read and write within its own storage. Storing data in storage is relatively expensive because it is permanently stored on the blockchain. Consequently, storage is primarily used to store the state of a contract, such as account balance, contract owner, or other information needed to execute the contract. Storage is organized into 2²⁵⁶ slots of 32 bytes, and all slots start with a value of zero. EVM provides two opcodes to interact with storage: SLOAD (to load a word from storage onto the stack) and SSTORE (to store a word into storage). Opcodes prefixed with an S indicate storage, while M indicates memory.
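As a rough mental model of the paragraph above, contract storage behaves like a sparse key-value map from 256-bit slot numbers to 256-bit words, with every unwritten slot reading as zero. The toy class below is only an illustration of that idea: the class and method names are ours, and gas accounting is left out entirely.

```python
WORD_LIMIT = 2**256  # slot numbers and values are both 256-bit words


class ContractStorage:
    """Toy model of one contract's storage: 2**256 slots of 32 bytes, all initially zero."""

    def __init__(self):
        self._slots = {}  # sparse map: only non-zero slots are actually kept

    @staticmethod
    def _check_word(value):
        if not 0 <= value < WORD_LIMIT:
            raise ValueError("value does not fit in a 256-bit word")

    def sload(self, slot):
        """SLOAD: read the word stored at `slot` (zero if never written)."""
        self._check_word(slot)
        return self._slots.get(slot, 0)

    def sstore(self, slot, value):
        """SSTORE: write `value` to `slot`; the value persists for later calls."""
        self._check_word(slot)
        self._check_word(value)
        if value == 0:
            self._slots.pop(slot, None)  # a zero slot is the same as an untouched one
        else:
            self._slots[slot] = value


storage = ContractStorage()
storage.sstore(0, 42)            # e.g. a balance kept in slot 0
print(storage.sload(0))          # the stored word
print(storage.sload(2**256 - 1)) # an untouched slot reads as zero
```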

Memory is a temporary storage space that exists only for the duration of a function call, similar to RAM on a computer. Memory consists of readable and writable byte-array data and is volatile, meaning that message calls always begin with an empty memory. As memory is not permanently written to the blockchain, it incurs significantly lower gas costs compared to storage. This cost-effectiveness makes memory the preferred option for storing variables that are only required temporarily, such as function arguments, local variables, or arrays that are dynamically created during function execution. The EVM provides three opcodes to interact with memory: MLOAD (to load a word from memory to the stack), MSTORE (to store a word into memory), and MSTORE8 (to store a byte into memory).
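The three memory opcodes can be pictured as operations on a byte array that starts empty and grows on demand. The following toy model is a sketch of that behavior only, with names of our own choosing; it assumes big-endian 32-byte words, growth in 32-byte steps, and ignores the gas charged for expansion.

```python
class Memory:
    """Toy model of EVM memory: a zero-filled byte array that starts empty for every call."""

    def __init__(self):
        self._data = bytearray()

    def _grow(self, end):
        """Extend memory with zero bytes, in whole 32-byte words, so that `end` is covered."""
        if end > len(self._data):
            new_len = (end + 31) // 32 * 32
            self._data.extend(bytes(new_len - len(self._data)))

    def mstore(self, offset, value):
        """MSTORE: write a 32-byte big-endian word at `offset`."""
        self._grow(offset + 32)
        self._data[offset:offset + 32] = value.to_bytes(32, "big")

    def mstore8(self, offset, value):
        """MSTORE8: write the lowest byte of `value` at `offset`."""
        self._grow(offset + 1)
        self._data[offset] = value & 0xFF

    def mload(self, offset):
        """MLOAD: read the 32-byte word starting at `offset`."""
        self._grow(offset + 32)
        return int.from_bytes(self._data[offset:offset + 32], "big")

    def size(self):
        return len(self._data)


memory = Memory()
memory.mstore(0, 1)        # word at offset 0 now ends in 0x01
memory.mstore8(0, 0xAB)    # overwrite only the first byte of that word
print(hex(memory.mload(0)))
print(memory.size())
```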

The stack serves as a very short-term storage space used for immediate computation, storing small local variables. It is designed to have the lowest gas cost, but it also comes with a size limitation (a maximum of 1,024 items of 256 bits each, which is 262,144 bits). The stack is used to store small amounts of data during computation, including parameters for instructions and the results of operations. As a developer, you need not overly concern yourself with the stack because the Solidity compiler takes care of it unless you are writing EVM assembly. Nevertheless, the EVM does offer opcodes to manipulate the stack directly, such as POP, PUSH, DUP, and SWAP.
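A minimal sketch of the stack described above, assuming the 1,024-item limit and 256-bit words from the text. The class is a toy with names of our own choosing, not an EVM implementation; `dup(n)` and `swap(n)` stand in for the DUPn and SWAPn opcode families.

```python
class Stack:
    """Toy model of the EVM stack: at most 1,024 items, each a 256-bit word."""

    LIMIT = 1024
    WORD_LIMIT = 2**256

    def __init__(self):
        self._items = []

    def push(self, value):
        """PUSH: place a word on top of the stack."""
        if len(self._items) >= self.LIMIT:
            raise OverflowError("stack limit of 1,024 items exceeded")
        if not 0 <= value < self.WORD_LIMIT:
            raise ValueError("value does not fit in a 256-bit word")
        self._items.append(value)

    def pop(self):
        """POP: remove and return the top word."""
        if not self._items:
            raise IndexError("stack underflow")
        return self._items.pop()

    def dup(self, n):
        """DUPn: copy the n-th item from the top (1 = the top itself) onto the top."""
        if not 1 <= n <= len(self._items):
            raise IndexError("stack underflow")
        self.push(self._items[-n])

    def swap(self, n):
        """SWAPn: exchange the top item with the item n positions below it."""
        if not 1 <= n < len(self._items):
            raise IndexError("stack underflow")
        self._items[-1], self._items[-1 - n] = self._items[-1 - n], self._items[-1]

    def __len__(self):
        return len(self._items)


stack = Stack()
stack.push(1)
stack.push(2)
stack.dup(2)    # stack is now 1, 2, 1 (top on the right)
stack.swap(1)   # stack is now 1, 1, 2
print(stack.pop(), len(stack))
```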

Note that in most cases, Solidity automatically manages the data location of variables. Developers typically do not need to specify a location themselves except for complex data types like structs or dynamic arrays.

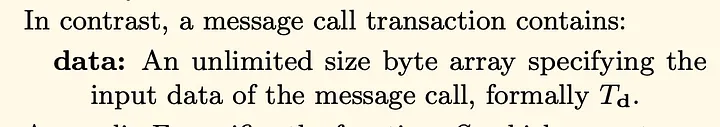

A2. The space used by rollups is called Calldata

Finally, the most critical EVM data storage space to understand in the context of EIP-4844 is calldata. Originally, calldata is a space for storing function arguments, particularly for functions that do not necessitate state changes such as view/pure functions. Calldata is read-only and typically constitutes a byte array containing the function selector and encoded parameters essential for a function call. Consequently, calldata is primarily used when making external function calls between contracts.
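To make the layout concrete, the sketch below assembles the calldata for a call to the ERC-20 function `transfer(address,uint256)`, an example chosen here for illustration rather than taken from this report. The 4-byte selector `0xa9059cbb` is hard-coded because Python's standard library has no Keccak-256, and the recipient address is a made-up placeholder.

```python
# First 4 bytes of keccak256("transfer(address,uint256)"), hard-coded for this example.
TRANSFER_SELECTOR = bytes.fromhex("a9059cbb")


def encode_transfer(recipient_hex, amount):
    """Build calldata: 4-byte selector, then each argument padded to a 32-byte word."""
    if recipient_hex.startswith("0x"):
        recipient_hex = recipient_hex[2:]
    recipient = bytes.fromhex(recipient_hex)
    if len(recipient) != 20:
        raise ValueError("an address is 20 bytes long")
    address_word = recipient.rjust(32, b"\x00")   # left-padded with zero bytes
    amount_word = amount.to_bytes(32, "big")      # big-endian 256-bit integer
    return TRANSFER_SELECTOR + address_word + amount_word


# Hypothetical recipient address, used only to show the layout.
calldata = encode_transfer("0x" + "11" * 20, 1_000)

print(len(calldata))            # selector plus two 32-byte words
print(calldata[:4].hex())       # function selector
print(calldata[4:36].hex())     # first argument: padded address
print(calldata[36:].hex())      # second argument: amount
```

A rollup batch is just a much larger byte string carried in the same field, which is why the per-byte price of calldata matters so much for rollup costs.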

Similar to memory, calldata is temporary and stored only during the execution of a transaction. However, this does not imply that all data in the calldata is deleted. While the calldata itself is deleted from the EVM, the transaction that contains the calldata is effectively stored permanently because it is included in the Ethereum block. In other words, while the rollup data is inaccessible to the EVM after the transaction is executed, it remains recorded on the Ethereum blockchain and can be accessed by anyone at any time.

As such, calldata offers two distinctive advantages: 1) it is inexpensive to use due to its read-only nature (4 gas/byte for zero bytes and 16 gas/byte for non-zero bytes) and 2) it has no explicit data size limit (in practice it is bounded by the block gas limit), which is highly advantageous when dealing with large data sets. This is why rollups utilize calldata to store their block data on Ethereum.
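The per-byte pricing quoted above is easy to apply to any payload. The sketch below counts zero and non-zero bytes and prices them at 4 and 16 gas; it covers only the calldata portion and leaves out the 21,000 gas base cost that every transaction pays. The function name is ours.

```python
ZERO_BYTE_GAS = 4       # gas per zero byte of calldata
NONZERO_BYTE_GAS = 16   # gas per non-zero byte of calldata


def calldata_gas(data):
    """Gas charged for the calldata bytes alone (the base transaction cost is excluded)."""
    zero_bytes = data.count(0)
    nonzero_bytes = len(data) - zero_bytes
    return zero_bytes * ZERO_BYTE_GAS + nonzero_bytes * NONZERO_BYTE_GAS


# Example payloads: all zero bytes, all non-zero bytes, and a 100 KB batch of non-zero bytes.
print(calldata_gas(bytes(10)))
print(calldata_gas(b"\x01" * 10))
print(calldata_gas(b"\xff" * 100_000))
```

A payload of entirely non-zero bytes is the worst case; compressed rollup batches contain few zero bytes, so their cost tends to sit close to the 16 gas/byte end of the range.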

Source: Ethereum Yellow Paper